As artificial intelligence (AI) becomes more prevalent, everyday users benefit from understanding the jargon that surrounds it. This guide explains common AI terminology in plain language, from machine learning to neural networks. Whether you’re a tech-savvy enthusiast or a curious beginner, it should help you follow conversations about AI.

Machine Learning

Machine learning is a field of artificial intelligence that develops algorithms and models that learn from data to make predictions or decisions, without being explicitly programmed for each task. The main types of machine learning are supervised learning, unsupervised learning, and reinforcement learning.

1.1 Supervised Learning

Supervised learning is a type of machine learning where the algorithm learns from labeled data. In this approach, the algorithm is provided with a set of input-output pairs, known as training data, and its task is to learn a mapping function that can predict the correct output given a new input. Examples of supervised learning algorithms include linear regression, support vector machines, and decision trees.
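To make the idea of learning a mapping from input-output pairs concrete, here is a minimal sketch of linear regression fitted by ordinary least squares. The training data and the `fit_line` helper are made up for illustration; real projects would normally use a library rather than hand-written code.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical labeled training data: each input has a known output.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1

slope, intercept = fit_line(train_x, train_y)

# Use the learned mapping to predict the output for an unseen input.
prediction = slope * 5.0 + intercept
```

The labels in `train_y` are what make this supervised: the algorithm is told the right answer for every training input.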

Supervised learning is widely used in various applications, such as image recognition, speech recognition, and natural language processing. It is particularly valuable when there is a large amount of labeled data available, as the algorithm can leverage this information to improve its predictions.

1.2 Unsupervised Learning

Unsupervised learning is another type of machine learning where the algorithm learns from unlabeled data. In this approach, the algorithm is not given any specific labels or outputs to predict. Instead, it focuses on finding patterns or structures in the data on its own. Some common techniques used in unsupervised learning include clustering and dimensionality reduction.
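As one illustration of clustering, the sketch below runs a simple one-dimensional k-means loop. The data points and starting centroids are invented for the example, and the `kmeans_1d` function is a simplified stand-in for what a library implementation would do.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Cluster 1-D points by alternating assignment and centroid updates."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled data: no outputs are provided, only the points.
points = [1.0, 1.5, 2.0, 9.0, 9.5, 10.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])
```

No labels are supplied anywhere; the two groups emerge from the distances between the points alone.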

Unsupervised learning is useful in scenarios where there is a scarcity of labeled data or the underlying structure of the data is unknown. It can be applied in areas such as customer segmentation, anomaly detection, and recommendation systems.

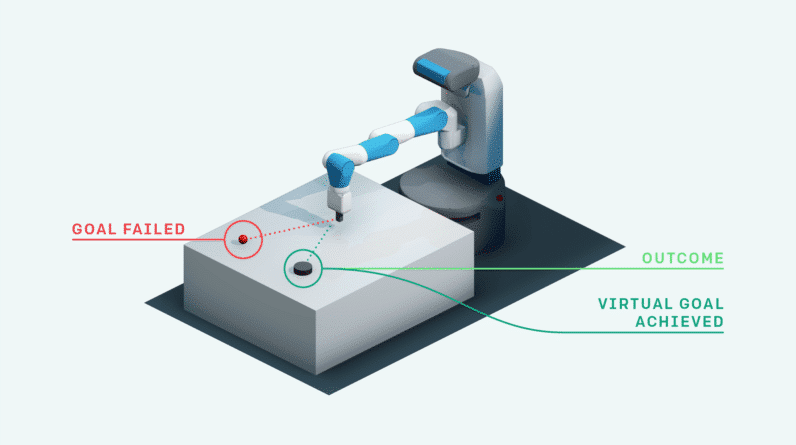

1.3 Reinforcement Learning

Reinforcement learning is a branch of machine learning that deals with learning from interactions with an environment. In this approach, an agent takes actions in an environment and receives rewards or penalties in return. The agent uses this feedback to update its strategy, often called a policy, so that it collects more reward over time.

Reinforcement learning is commonly used in areas such as robotics, game playing, and autonomous driving. It allows machines to learn and adapt through trial and error, leading to the development of intelligent and autonomous systems.

Deep Learning

Deep learning is a subset of machine learning that trains artificial neural networks with multiple layers, also known as deep neural networks. These networks are loosely inspired by the structure of the human brain, and stacking many layers lets them learn complex patterns in data.

2.1 Neural Networks

Neural networks are the fundamental building blocks of deep learning. They consist of interconnected nodes, called neurons, organized in layers. Each neuron takes inputs from multiple sources, computes a weighted sum, applies an activation function, and passes the result to the next layer. The layers between the input and the output are called hidden layers, and adding more of them lets the network model complex relationships between inputs and outputs.
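The following sketch shows what a layer of neurons computes, assuming each neuron takes a weighted sum of its inputs, adds a bias, and applies a sigmoid activation. The weights and biases are hypothetical values picked for the example; in practice they are learned during training.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """Each neuron: weighted sum of the inputs plus a bias, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
inputs = [1.0, 0.0]
hidden = dense_layer(inputs, weights=[[0.0, 1.0], [2.0, -1.0]], biases=[0.0, -2.0])
output = dense_layer(hidden, weights=[[1.0, 1.0]], biases=[-1.0])
```

The output of the hidden layer becomes the input of the next layer, which is all that "passing to the next layer" means here.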

Neural networks are widely used in image recognition, natural language processing, and speech recognition. They are capable of capturing intricate patterns and features in data, making them powerful tools for solving complex problems.

2.2 Convolutional Neural Networks (CNNs)

Convolutional neural networks, or CNNs, are a specific type of neural network designed for processing data with a grid-like structure, such as images. CNNs leverage the concept of convolution, which involves applying filters or kernels to the input data to extract relevant features. These features are then passed through layers of neurons for further processing and analysis.
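Applying a filter to an image can be sketched in a few lines. The example below slides a small kernel over a tiny made-up image with no padding and a stride of 1; the `convolve2d` helper and the edge-detecting kernel are illustrative assumptions. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 3x4 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A kernel that responds where brightness increases from left to right.
vertical_edge = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, vertical_edge)
```

The resulting feature map is zero everywhere except along the column where the image changes from dark to bright, which is the feature this kernel extracts.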

CNNs have revolutionized computer vision tasks, such as object detection and image classification. Their ability to capture spatial information and hierarchical representations makes them highly effective in analyzing visual data.

2.3 Recurrent Neural Networks (RNNs)

Recurrent neural networks, or RNNs, are a type of neural network that is designed to handle sequential data. Unlike traditional feedforward neural networks, RNNs have feedback connections between the neurons, allowing them to retain information about previous inputs. This ability makes RNNs well-suited for tasks such as natural language processing and speech recognition.
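A rough sketch of how an RNN retains information: at each step, the new hidden state is computed from both the current input and the previous hidden state. The scalar weights below are hypothetical and fixed by hand; a real RNN uses weight matrices learned from data.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one element at a time, carrying the hidden state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
```

Although the last two inputs are identical, the final states differ, because the first input of `states_a` is still echoing through the hidden state.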

RNNs excel at capturing temporal dependencies and context in data, making them ideal for tasks that involve sequences, such as text generation and language translation.

2.4 Generative Adversarial Networks (GANs)

Generative adversarial networks, or GANs, are a class of deep learning models that consist of two neural networks: a generator and a discriminator. The generator network produces synthetic data samples, while the discriminator network learns to distinguish between real and generated samples. The two networks play a minimax game, in which the generator tries to deceive the discriminator and the discriminator tries to correctly tell real data from generated data.
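The contest between the two networks is usually written as a single objective. The symbols below are not defined elsewhere in this article and follow the common convention: $G$ is the generator, $D$ is the discriminator, $x$ is a real sample drawn from the data distribution $p_{\text{data}}$, and $z$ is random noise drawn from a prior $p_z$.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by giving real samples a score close to 1 and generated samples a score close to 0. The generator tries to make $V$ small by producing samples that the discriminator scores close to 1.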

GANs have gained significant attention in recent years for their ability to generate realistic images, audio, and text. They have applications in areas such as image synthesis, data augmentation, and drug discovery.

Natural Language Processing (NLP)

Natural language processing is a subfield of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human language. NLP combines techniques from linguistics, computer science, and machine learning to process, analyze, and derive meaning from text or speech data.

3.1 Sentiment Analysis

Sentiment analysis, also known as opinion mining, is a common application of NLP that involves determining the sentiment or emotion expressed in a piece of text. This could be identifying whether a customer review is positive or negative or analyzing social media posts to gauge public opinion on a certain topic.

Sentiment analysis algorithms use various techniques, including machine learning and natural language understanding, to classify text into different sentiment categories, such as positive, negative, or neutral. These algorithms can be trained on labeled data to learn patterns and linguistic cues associated with different sentiments.
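To show the shape of the task, here is a deliberately simple sketch that classifies text by counting words from small hand-written lists. This is a lexicon-based approach, not the trained machine learning models described above, and the word lists are hypothetical.

```python
# Hypothetical, tiny sentiment lexicons for illustration only.
POSITIVE = {"great", "love", "excellent", "good"}
NEGATIVE = {"bad", "terrible", "hate", "poor"}

def classify_sentiment(text):
    """Label text as positive, negative, or neutral by counting cue words."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

review_label = classify_sentiment("I love this phone, great battery!")
```

A trained model replaces the fixed word lists with patterns learned from labeled examples, which lets it handle negation, sarcasm, and context far better than simple counting.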

3.2 Named Entity Recognition (NER)

Named Entity Recognition, or NER, is a task in NLP that involves identifying and classifying named entities in text, such as names of people, organizations, locations, dates, and other specific items. NER algorithms use techniques like part-of-speech tagging and machine learning to analyze the context and structure of a sentence and determine the entities present.

NER is a crucial component in many NLP applications, including information retrieval, question answering, and semantic search. It enables computers to understand the entities mentioned in a document, providing valuable insights for various tasks.

3.3 Language Generation

Language generation refers to the process of generating human-like text or speech by a computer system. This involves not only generating grammatically correct sentences but also making them coherent and contextually relevant. Language generation algorithms use techniques such as language modeling, deep learning, and rule-based approaches to produce meaningful and fluent text.
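Language modeling can be illustrated with a toy bigram model, which predicts each next word from the one before it. The corpus below is invented, and always picking the most frequent next word is a simplification; modern systems use neural networks and sample from a probability distribution.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(counts, start, max_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    output = [start]
    while len(output) < max_words and counts[output[-1]]:
        next_word = counts[output[-1]].most_common(1)[0][0]
        output.append(next_word)
    return " ".join(output)

# Hypothetical training corpus.
corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigrams(corpus)
sentence = generate(model, "the", max_words=5)
```

Even this tiny model produces locally plausible word sequences, but it has no notion of meaning or long-range coherence, which is where deep learning approaches come in.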

Language generation has applications in chatbots, virtual assistants, and automated content generation. It enables computers to communicate in natural language and to produce fluent text that can be difficult to tell apart from human-written content.

Computer Vision

Computer vision is a branch of artificial intelligence that focuses on enabling computers to interpret and understand visual information from the physical world, such as images and videos. Computer vision algorithms aim to replicate the human visual system’s capabilities to analyze and extract meaningful information from visual data.

4.1 Object Detection

Object detection is a computer vision task that involves detecting and localizing objects of interest within an image or video. Algorithms for object detection use various techniques, such as feature extraction, machine learning, and deep learning, to identify the presence of objects and provide bounding boxes around them.
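Bounding boxes are typically compared using intersection over union (IoU), which measures how much a predicted box overlaps a reference box. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples, a format chosen for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical predicted and reference boxes.
overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

An IoU of 1 means the boxes match exactly and 0 means they do not overlap at all. Detection benchmarks usually count a prediction as correct only above some IoU threshold.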

Object detection has numerous applications, including surveillance, autonomous driving, and augmented reality. It enables machines to understand their surroundings and interact with the physical world.

4.2 Image Classification

Image classification is a fundamental task in computer vision that involves categorizing images into different classes or categories. Algorithms for image classification learn patterns and features from labeled training data and use them to predict the class of unseen images.

Image classification has a wide range of applications, including medical diagnosis, face recognition, and content filtering. It allows computers to understand the content of an image and make intelligent decisions based on that understanding.

4.3 Image Segmentation

Image segmentation is a computer vision task that involves dividing an image into multiple segments or regions based on their visual properties, such as color, texture, or shape. Algorithms for image segmentation aim to identify and segment different objects or regions within an image.
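The simplest form of segmentation is thresholding, which splits an image into two regions based on pixel brightness. The sketch below uses a made-up grayscale image and a hand-picked threshold; practical systems usually rely on learned models rather than a fixed cutoff.

```python
def threshold_segment(image, threshold):
    """Label each pixel 1 (foreground) or 0 (background) by brightness."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in image]

# Hypothetical 3x3 grayscale image with brightness values from 0 to 255.
image = [
    [10, 20, 200],
    [15, 220, 210],
    [12, 18, 25],
]
mask = threshold_segment(image, threshold=128)
```

The resulting mask has the same shape as the image and marks which pixels belong to the bright region.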

Image segmentation has applications in various fields, including medical imaging, autonomous driving, and object recognition. It enables machines to extract detailed information from images and perform more precise analysis and understanding.

Big Data

Big data refers to datasets that are too large or complex to be processed or analyzed efficiently with traditional data processing techniques. The field of big data focuses on developing tools, techniques, and methodologies to handle, store, process, and analyze massive amounts of data.

5.1 Data Collection

Data collection is the process of gathering data from various sources, such as sensors, social media, or web scraping. With the spread of the internet and connected devices, the amount of available data has grown rapidly, making data collection a crucial step in big data analytics.

Data collection methodologies can include manual data entry, data extraction from databases, or real-time data streaming. The collected data is then processed and transformed into a suitable format for further analysis.

5.2 Data Processing

Data processing involves transforming raw data into a usable format for analysis and extracting valuable insights. This step typically involves cleaning the data by removing inconsistencies, handling missing values, and dealing with outliers or noise. Data processing also includes data integration, transformation, aggregation, and reduction to enhance the quality and usefulness of the data.
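As a small illustration of cleaning, the sketch below fills missing values with the median and then drops values that lie far from it. The `clean` helper, the sample readings, and the cutoff of three median absolute deviations are assumptions made for this example, not a universal rule.

```python
from statistics import median

def clean(values, max_deviation=3.0):
    """Fill missing values with the median, then drop extreme outliers."""
    present = [v for v in values if v is not None]
    med = median(present)

    # Handle missing values: replace None with the median of known values.
    filled = [med if v is None else v for v in values]

    # Median absolute deviation: a spread measure that resists outliers.
    mad = median(abs(v - med) for v in filled)
    if mad == 0:
        return filled

    # Keep only values within max_deviation MADs of the median.
    return [v for v in filled if abs(v - med) / mad <= max_deviation]

# Hypothetical sensor readings with one missing value and one outlier.
raw = [10.0, 12.0, None, 11.0, 13.0, 500.0]
cleaned = clean(raw)
```

Whether to fill, drop, or flag a suspicious value depends on the dataset, so a step like this is normally tuned to the data at hand.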

Data processing techniques can vary depending on the nature and structure of the data. Big data technologies, such as Apache Hadoop and Apache Spark, provide scalable and distributed processing capabilities to handle large volumes of data efficiently.

5.3 Data Analysis

Data analysis is the process of examining and interpreting data to extract meaningful insights and patterns. In the context of big data, data analysis techniques need to be scalable and efficient to handle the large volumes of data involved.

Data analysis can involve various statistical, machine learning, and data mining techniques to discover hidden patterns, relationships, or trends in the data. These insights can help businesses make informed decisions, optimize processes, and drive innovation.

Algorithm

Algorithms form the core of machine learning and artificial intelligence systems. An algorithm is a step-by-step procedure or set of rules designed to solve a specific problem or accomplish a particular task. Different types of algorithms are used in machine learning, depending on the problem and the desired outcome.

6.1 Supervised Algorithm

Supervised algorithms are a type of machine learning algorithm that learns from labeled data to make predictions or decisions. These algorithms are trained on input-output pairs, where the input represents the features or attributes, and the output represents the target or desired outcome.

Supervised algorithms address two main kinds of task: regression (predicting a number) and classification (predicting a category). Models such as linear regression, decision trees, and support vector machines learn the underlying patterns in the data and make predictions on new, unseen data. These algorithms are widely used in tasks such as object recognition, spam filtering, and fraud detection.

6.2 Unsupervised Algorithm

Unsupervised algorithms are machine learning algorithms that aim to find structure or patterns in unlabeled data. Unlike supervised learning, unsupervised learning does not require the presence of explicit labels or target values.

Unsupervised algorithms use techniques such as clustering, dimensionality reduction, and anomaly detection to discover hidden relationships or groupings in the data. These algorithms are commonly used in exploratory data analysis, market segmentation, and recommendation systems.

6.3 Reinforcement Learning Algorithm

Reinforcement learning algorithms are a type of machine learning algorithm that learns through interaction with an environment. In reinforcement learning, an agent learns to take actions in an environment to maximize cumulative rewards or outcomes.

Reinforcement learning algorithms employ techniques such as Q-learning, policy gradients, and deep reinforcement learning to learn optimal strategies and make decisions in dynamic and uncertain environments. These algorithms are widely used in robotics, game playing, and autonomous systems.
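Here is a minimal sketch of tabular Q-learning on a made-up environment: five positions in a row, where the agent starts at position 0 and earns a reward of 1 for reaching position 4. The environment, the learning rate, the discount factor, and the purely random exploration are all assumptions chosen to keep the example short.

```python
import random

N_STATES = 5       # positions 0..4 on a line; position 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right

def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a table of action values from random exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore uniformly at random; Q-learning still learns the
            # values of the best policy because it is off-policy.
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            # Target: immediate reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train()
# The greedy policy picks the highest-valued action in each non-goal state.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

After training, the greedy policy moves right from every position, which is the shortest route to the reward.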

Neural Network Architecture

Neural network architecture refers to the structure and organization of the interconnected nodes, or neurons, in a neural network. Different neural network architectures are designed to handle different types of data or tasks effectively.

7.1 Feedforward Neural Networks

Feedforward neural networks are the simplest type of neural network architecture; the best-known example is the multilayer perceptron (MLP). In a feedforward neural network, information flows in one direction only, from the input layer to the output layer, without any feedback connections.

Feedforward neural networks are widely used in various applications, such as image recognition, speech recognition, and natural language processing. They can model complex nonlinear relationships and capture intricate patterns in the data.

7.2 Recurrent Neural Networks (RNNs)

Recurrent neural networks, or RNNs, are a type of neural network architecture that introduces feedback connections between the neurons. This allows RNNs to retain information about previous inputs or states, enabling them to model sequential or time-dependent data.

RNNs are particularly well-suited for tasks that involve sequential data, such as natural language processing, speech recognition, and time series analysis. They can effectively capture temporal dependencies and context in the data, making them powerful tools for sequential modeling.

7.3 Convolutional Neural Networks (CNNs)

Convolutional neural networks, or CNNs, are a specialized type of neural network architecture designed for processing grid-like data, such as images or videos. CNNs leverage the concept of convolution, where filters or kernels are applied to the input data to extract relevant features.

Because stacked convolutional layers build up from simple features such as edges to larger structures such as whole objects, CNNs are a standard choice for computer vision tasks like object detection and image classification.

7.4 Long Short-Term Memory (LSTM)

Long Short-Term Memory, or LSTM, is a specific type of recurrent neural network architecture designed to address the problem of vanishing gradients in traditional RNNs. LSTMs introduce gating mechanisms that control which information is stored, kept, or discarded over time.
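The gating idea can be sketched with a single scalar LSTM step. The parameter layout (one `(input weight, recurrent weight, bias)` tuple per gate) and the values are hypothetical; real LSTMs use learned weight matrices over vectors.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM step; params maps each gate to (w, u, b)."""
    def pre_activation(gate):
        w, u, b = params[gate]
        return w * x + u * h_prev + b

    forget = sigmoid(pre_activation("forget"))      # how much old memory to keep
    write = sigmoid(pre_activation("input"))        # how much new content to store
    read = sigmoid(pre_activation("output"))        # how much memory to expose
    candidate = math.tanh(pre_activation("candidate"))  # proposed new content

    c = forget * c_prev + write * candidate  # updated cell (memory) state
    h = read * math.tanh(c)                  # updated hidden state
    return h, c

# Hypothetical parameters: a large forget-gate bias keeps the gate nearly open.
zero = (0.0, 0.0, 0.0)
params = {"forget": (0.0, 0.0, 5.0), "input": zero, "output": zero, "candidate": zero}
h, c = lstm_step(x=0.0, h_prev=0.0, c_prev=2.0, params=params)
```

With the forget gate almost fully open, the cell state stays close to its previous value of 2.0, which is how an LSTM carries information across many steps.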

LSTMs are particularly effective in modeling sequences with long-term dependencies or gaps in the data. They have applications in tasks such as speech recognition, machine translation, and sentiment analysis.

Artificial General Intelligence (AGI)

Artificial General Intelligence, or AGI, refers to a hypothetical machine intelligence that can understand or learn any intellectual task that a human being can, rather than being limited to a single domain.

8.1 Turing Test

The Turing Test, proposed by the mathematician and computer scientist Alan Turing, is a test of a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. In the Turing Test, a human evaluator engages in a conversation with a machine and determines whether they can distinguish between the machine and another human based solely on their responses.

The Turing Test is often cited as a benchmark for machine intelligence. Passing it would show that a machine can hold a conversation convincingly, although this alone would not prove that the machine has general, human-level intelligence.

8.2 Strong AI

Strong AI is a term often used interchangeably with artificial general intelligence: a system capable of understanding or performing any intellectual task that a human being can do. In some usages, strong AI also implies replicating consciousness in a machine, not just human-level performance.

Although strong AI remains a matter of speculation and ongoing research, its potential implications and possibilities are vast. Strong AI could revolutionize fields such as healthcare, education, and scientific research, and lead to significant advancements in technology and society.

8.3 Weak AI

Weak AI, also known as narrow AI, refers to systems that are designed to perform specific tasks or solve specific problems. Weak AI is typically designed to excel in a particular domain or achieve a specific goal, without possessing the broad range of capabilities associated with human intelligence.

Weak AI systems exist in various applications, such as virtual assistants, recommendation systems, and autonomous vehicles. Although they may exhibit impressive performance within their specialized tasks, weak AI systems are limited to specific domains and lack generalized intelligence.

Bias in AI

Bias in AI refers to the potential for machine learning algorithms or systems to produce unfair or discriminatory outcomes due to the data or assumptions used in their development. The presence of bias can result in inequitable treatment or unequal opportunities for certain individuals or groups.

9.1 Data Bias

Data bias refers to biases present in the training data used to develop machine learning models. If the training data is biased or unrepresentative of the population or scenarios to which the model will be applied, the model may produce biased outputs or decisions.

Data bias can occur due to various factors, such as the underrepresentation of certain groups in the training data or the presence of skewed or discriminatory labels. Addressing data bias involves ensuring diverse and balanced training data, evaluating the impact of bias on model performance, and implementing mitigation strategies.

9.2 Algorithmic Bias

Algorithmic bias refers to biases that emerge in machine learning algorithms due to the way they are designed, trained, or deployed. Biases can be unintentionally introduced during algorithm development, leading to unfair outcomes or biased decisions.

Algorithmic bias can arise from factors such as biased training data, biased model architecture, or biased decision-making criteria. Mitigating algorithmic bias involves careful consideration of the ethical implications of algorithm design, the evaluation of model fairness, and transparency in decision-making processes.
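One simple way to evaluate fairness is to compare how often a model gives a positive outcome to different groups, a measure commonly called demographic parity. The predictions and group names below are invented for illustration, and this is only one of several fairness measures, each with its own trade-offs.

```python
def positive_rate(predictions):
    """Share of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)

def demographic_parity_difference(predictions_by_group):
    """Gap between the highest and lowest positive rate across groups."""
    rates = {
        group: positive_rate(preds)
        for group, preds in predictions_by_group.items()
    }
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical model outputs (1 = approved, 0 = rejected) for two groups.
predictions = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}
gap, rates = demographic_parity_difference(predictions)
```

A gap of 0 means every group receives positive outcomes at the same rate. A large gap, as in this example, is a signal to investigate the data and the model more closely.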

9.3 Ethical Implications

Bias in AI and machine learning systems raises significant ethical concerns and implications. Unfair or discriminatory outcomes can lead to social inequities, reinforce existing biases, or perpetuate discrimination.

Addressing the ethical implications of bias in AI requires a multidisciplinary approach that involves data scientists, ethicists, policymakers, and other stakeholders. Developing guidelines, regulations, and frameworks for responsible AI usage can help mitigate biases, promote fairness, and ensure the ethical deployment of AI systems.

Robotics and Automation

Robotics and automation involve the use of machines and technology to automate processes, perform tasks, or interact with the physical world. Robotics and automation technologies can range from simple industrial robots to advanced humanoid robots and autonomous systems.

10.1 Robotic Process Automation (RPA)

Robotic Process Automation, or RPA, refers to the use of software robots (often called bots) to automate repetitive, rule-based tasks in business processes. RPA technology enables software to mimic human actions, such as data entry, data manipulation, and data extraction, more efficiently and with fewer errors.

RPA has applications in various industries, such as finance, healthcare, and customer service, where repetitive tasks can be automated to improve productivity, reduce errors, and free up human resources for more complex and strategic activities.

10.2 Industrial Automation

Industrial automation involves the use of machines, control systems, and information technologies to optimize and streamline manufacturing and production processes. Industrial automation technologies, such as robots, programmable logic controllers (PLCs), and machine vision systems, can perform tasks autonomously or in collaboration with human operators.

Industrial automation offers numerous benefits, including increased productivity, improved product quality, and enhanced worker safety. It enables companies to achieve higher efficiency and flexibility in their manufacturing processes and adapt to changing market demands.

10.3 Autonomous Robots

Autonomous robots are robotic systems that can perform tasks or make decisions without human intervention. These robots are equipped with sensors, actuators, and artificial intelligence algorithms that enable them to perceive and understand their environment, plan their actions, and execute complex tasks.

Autonomous robots have applications in various domains, such as agriculture, logistics, and healthcare. They can perform tasks ranging from autonomous navigation and object manipulation to environmental monitoring and search and rescue operations. The development of autonomous robots opens up new possibilities for automation and intelligent systems in diverse fields.

In conclusion, artificial intelligence encompasses a wide range of technologies and concepts that have the potential to revolutionize various sectors and industries. Understanding the different branches of AI, such as machine learning, deep learning, natural language processing, and computer vision, is crucial for individuals to stay informed about the latest advancements and applications. As AI continues to evolve, it is essential to address ethical considerations, such as bias and fairness, and harness the potential of AI for the benefit of society.