In today’s digital age, communication between humans and machines has become increasingly important. From voice assistants to chatbots, machines are constantly evolving to understand and respond to human language. This article explores the fascinating field of Natural Language Processing (NLP) and how it enables machines to comprehend and interpret human language, revolutionizing the way we interact with technology.

What is Natural Language Processing

Definition

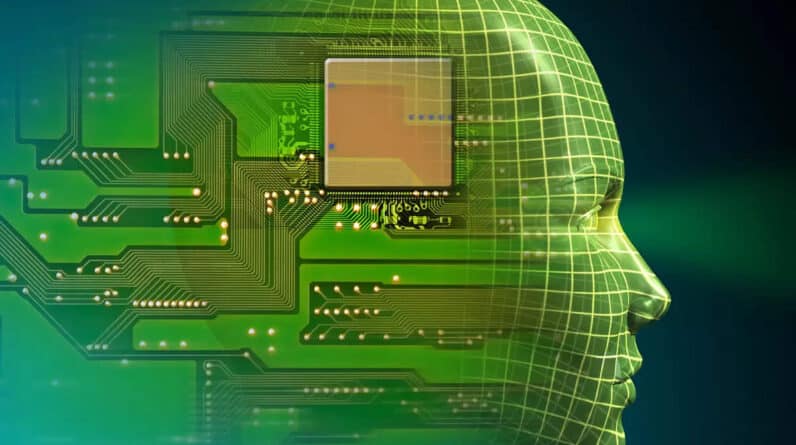

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and humans using human language. It involves the development of algorithms and models that enable machines to understand and analyze natural language in various forms, such as text or speech. NLP aims to bridge the gap between human communication and machine comprehension, enabling computers to understand, interpret, and generate human language.

Importance

Natural Language Processing plays a crucial role in enabling machines to understand and process human language, making it a key technology in the advancement of artificial intelligence. By developing algorithms and models that can analyze and interpret textual or spoken language, NLP opens up a wide range of possibilities for automating tasks, improving customer experiences, extracting valuable information from large volumes of data, and enhancing decision-making processes.

Applications

The applications of Natural Language Processing are diverse and span many industries. In healthcare, NLP can be used to extract relevant information from medical records or to assist with diagnosis and treatment planning. In the financial sector, NLP can be used for sentiment analysis of news articles or social media feeds, which can serve as one signal for anticipating market trends. NLP is also integral to virtual assistants and chatbots, enabling them to understand and respond to user queries conversationally. Additionally, NLP finds applications in machine translation, text classification, and speech recognition, among others.

How Natural Language Processing Works

Tokenization

Tokenization is the process of breaking textual data into individual tokens, usually words, subwords, or punctuation marks; splitting a text into sentences is a related step often called sentence tokenization or sentence segmentation. It separates the text into meaningful units to facilitate further analysis. Tokenization is a crucial step in NLP because it sets the foundation for tasks such as syntactic and semantic analysis: once text is broken into tokens, NLP models can process and analyze the structure and meaning of the language.
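As a rough illustration, the following Python sketch tokenizes text with a single regular expression and splits sentences on terminal punctuation. The function names and patterns are illustrative assumptions; production tokenizers handle many more cases, such as abbreviations (“Dr.”), URLs, and subword units.

```python
import re

# A token is a run of word characters, optionally with one internal
# apostrophe (e.g. "isn't"), or any single punctuation character.
TOKEN_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def split_sentences(text: str) -> list[str]:
    """Naively split after '.', '!' or '?' when followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


text = "NLP isn't magic. It starts with tokens!"
print(split_sentences(text))  # → ["NLP isn't magic.", 'It starts with tokens!']
print(tokenize(text))  # → ['NLP', "isn't", 'magic', '.', 'It', 'starts', 'with', 'tokens', '!']
```

A regex tokenizer like this is easy to inspect but brittle, which is one reason many modern systems learn subword vocabularies from data instead.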

Parsing

Parsing refers to the process of analyzing the grammatical structure of a sentence. It involves determining the relationships between words and their roles in a sentence. Parsing helps in understanding the syntactic structure of a sentence, which is essential for subsequent analysis and interpretation. There are different parsing techniques, such as constituency parsing and dependency parsing, that can be used depending on the requirements of the NLP task.
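One classic algorithm for constituency parsing is CKY (Cocke-Kasami-Younger), which fills a chart bottom-up with every constituent that can span each stretch of words. The sketch below assumes a tiny hand-written grammar in Chomsky normal form (the rules and lexicon are hypothetical) and returns the first parse it finds rooted in `S`; real parsers use much larger, usually probabilistic, grammars or learned models.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form.
BINARY_RULES = {  # (left child, right child) -> possible parent labels
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
LEXICON = {  # word -> preterminal labels
    "she": {"NP"},
    "the": {"Det"}, "a": {"Det"},
    "dog": {"N"}, "ball": {"N"},
    "saw": {"V"}, "chased": {"V"},
}


def cky_parse(words):
    """Return a bracketed parse tree of `words` rooted in S, or None."""
    n = len(words)
    # chart[i][j] maps a label to one subtree covering words[i:j]
    chart = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for label in LEXICON.get(word, ()):
            chart[i][i + 1][label] = (label, word)
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # every split point inside the span
                for left, right in product(chart[i][k], chart[k][j]):
                    for parent in BINARY_RULES.get((left, right), ()):
                        # keep only the first derivation found for each label
                        chart[i][j].setdefault(
                            parent, (parent, chart[i][k][left], chart[k][j][right])
                        )
    return chart[0][n].get("S")


print(cky_parse("she saw the dog".split()))
# → ('S', ('NP', 'she'), ('VP', ('V', 'saw'), ('NP', ('Det', 'the'), ('N', 'dog'))))
```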

Semantic Analysis

Semantic analysis aims to understand the meaning of a sentence or a phrase. It goes beyond the syntactic structure and focuses on the interpretation of the language. Semantic analysis involves tasks such as word sense disambiguation, semantic role labeling, and entity linking. By analyzing the semantics of the text, NLP models can infer the relationships between words and derive meaningful insights from the language.
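Word sense disambiguation can be illustrated with a simplified version of the Lesk algorithm, which picks the dictionary sense whose definition shares the most words with the surrounding sentence. The two-sense inventory and stopword list below are hypothetical, and because the sketch does no stemming, “deposited” does not match “deposits”.

```python
import re

# Hypothetical mini sense inventory: sense id -> short dictionary gloss.
SENSES = {
    "bank": {
        "bank/finance": "an institution that accepts deposits of money and makes loans",
        "bank/river": "the sloping land beside a river or stream where water flows",
    },
}
STOPWORDS = {"a", "an", "the", "of", "and", "or", "to", "in", "on", "at", "that", "where", "by"}


def content_words(text):
    """Lowercased alphabetic words, minus stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(word, sentence):
    """Pick the sense whose gloss overlaps most with the sentence's content words."""
    context = content_words(sentence)
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense


print(simplified_lesk("bank", "I deposited money at the bank"))
print(simplified_lesk("bank", "We fished from the bank of the river"))
```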

Named Entity Recognition

Named Entity Recognition (NER) is the task of identifying and classifying named entities in text. Named entities can include person names, organization names, dates, locations, and more. NER is important in various NLP applications, such as information extraction, question answering systems, and sentiment analysis. By recognizing and categorizing named entities, NLP models can extract valuable information from unstructured text.
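The simplest form of NER combines a lookup list of known names (a gazetteer) with patterns for regular formats such as dates. This sketch assumes a hypothetical three-entry gazetteer and two date formats; practical NER systems are usually statistical or neural models trained on labeled text, because fixed lists and patterns cannot cover unseen names.

```python
import re

# Hypothetical gazetteer of known names and their entity types.
GAZETTEER = {
    "Ada Lovelace": "PERSON",
    "Acme Corp": "ORG",
    "London": "LOC",
}
MONTHS = "January|February|March|April|May|June|July|August|September|October|November|December"
# ISO dates (2024-03-15) or day-month-year dates (15 March 2024).
DATE_PATTERN = re.compile(rf"\b\d{{4}}-\d{{2}}-\d{{2}}\b|\b\d{{1,2}} (?:{MONTHS}) \d{{4}}\b")


def find_entities(text):
    """Return (start, end, surface_text, label) tuples sorted by position."""
    entities = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            entities.append((match.start(), match.end(), match.group(), label))
    for match in DATE_PATTERN.finditer(text):
        entities.append((match.start(), match.end(), match.group(), "DATE"))
    return sorted(entities)


for entity in find_entities("Ada Lovelace met Acme Corp in London on 15 March 2024."):
    print(entity)
```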

Language Generation

Language generation is the production of text or speech that is coherent, contextually relevant, and human-like. It is a challenging NLP task because the model must account for the context, the intended audience, and the desired outcome of the generated language. Language generation finds applications in chatbots, virtual assistants, text synthesis, and storytelling, with the aim of producing natural and engaging output from machines.
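A bigram Markov chain is one of the simplest ways to generate text: it learns which words follow which in a corpus, then samples a sentence one word at a time. The sketch below is far weaker than modern neural generators, and the two-sentence corpus is hypothetical, but it shows the core idea of producing each word conditioned on what came before.

```python
import random
from collections import defaultdict


def train_bigrams(corpus):
    """Map each word (plus the start marker <s>) to the words seen right after it."""
    successors = defaultdict(list)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            successors[prev].append(nxt)
    return successors


def generate(successors, max_words=20, seed=None):
    """Sample words until the end marker </s> or max_words is reached."""
    rng = random.Random(seed)
    word, output = "<s>", []
    while len(output) < max_words:
        # duplicates in the list make frequent continuations more likely
        word = rng.choice(successors[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


model = train_bigrams(["the cat sat on the mat", "the dog sat on the rug"])
print(generate(model, seed=1))
```

The corpus must be non-empty; otherwise there is nothing to sample after `<s>`.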

Challenges in Natural Language Processing

Lack of Context

Understanding human language requires a deep understanding of the context in which it is used, and NLP models often struggle to interpret language accurately without sufficient context. For example, the same word can have different meanings depending on its surroundings: “bank” may refer to a financial institution or to the edge of a river. Addressing the challenge of context is crucial for improving the accuracy and reliability of natural language processing systems.

Ambiguity

Ambiguity is a common challenge in NLP because language is inherently ambiguous. Words and phrases can have multiple interpretations, making it difficult for machines to determine the intended meaning. Resolving ambiguity requires sophisticated algorithms and models that can analyze the context and weigh the different possible interpretations. The challenge is especially pronounced in English, where many common words have several senses and can serve as more than one part of speech (for example, “run” as a noun or a verb).

Variability in Language Use

Language use varies across different regions, cultures, and social groups. This variability poses a challenge in NLP as models trained on one type of language may struggle to accurately interpret and analyze variations in language use. Different dialects, idiomatic expressions, and slang terms can complicate the understanding of language. Developing NLP models that can handle this variability is an ongoing challenge in the field.

Multilingualism

As the world becomes more interconnected, multilingualism has become increasingly important in NLP. Developing models that can understand and process multiple languages accurately is a significant challenge. Each language has its own characteristics and nuances, and accounting for these variations in NLP models requires extensive research and development. NLP systems that support multilingual communication are highly desirable.

Understanding Emotion and Tone

Human language is not only about conveying information but also about expressing emotions, attitudes, and tones. Understanding the emotional content and tone of language is a challenging task for NLP models. Sarcasm, irony, and subtle emotional cues can be difficult for machines to interpret accurately. Developing NLP systems that can recognize and understand the emotional and tonal aspects of language is an ongoing research area in NLP.

Methods and Techniques in Natural Language Processing

Rule-Based Systems

Rule-based systems rely on predefined rules or patterns to analyze and process language. These rules are created by experts in the field and are often based on linguistic principles. Rule-based systems can be effective in specific domains where the rules are well-defined and the language follows predictable patterns. However, they can be limited in handling language variations and complex linguistic phenomena.
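As a small illustration of the rule-based style, the following sketch strips word endings using a few hand-written suffix rules, loosely in the spirit of classic stemmers such as Porter's (it is not the Porter algorithm itself). The rule list is hypothetical. The final example shows the kind of limitation described above: the rule that turns “jumping” into “jump” turns “running” into “runn”.

```python
# Hypothetical suffix rules, checked in order: (suffix, replacement,
# minimum number of characters that must remain before the suffix).
SUFFIX_RULES = [
    ("ational", "ate", 2),  # relational -> relate
    ("ization", "ize", 2),  # organization -> organize
    ("ness", "", 3),        # kindness -> kind
    ("ing", "", 3),         # jumping -> jump
    ("ies", "y", 2),        # studies -> study
    ("ed", "", 3),          # jumped -> jump
    ("s", "", 3),           # cats -> cat
]


def stem(word):
    """Apply the first matching rule whose remaining stem is long enough."""
    word = word.lower()
    for suffix, replacement, min_stem in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: len(word) - len(suffix)] + replacement
    return word


for w in ["relational", "kindness", "studies", "jumping", "running"]:
    print(w, "->", stem(w))
```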

Statistical Approaches

Statistical approaches in NLP involve using statistical models and algorithms to analyze and process language. These models are trained on large corpora, which are often annotated, to learn patterns and make predictions. Statistical approaches have been successful in various NLP tasks, such as machine translation and sentiment analysis. They can handle language variations and adapt to different contexts. However, they may struggle when training data is limited or when language phenomena are rare.
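A bigram language model is a classic statistical approach: it estimates the probability of each word given the previous word from corpus counts. The sketch below assumes whitespace tokenization and uses add-one (Laplace) smoothing, P(w | prev) = (c(prev, w) + 1) / (c(prev) + V) with V the vocabulary size, so that unseen word pairs still receive a small probability. The two training sentences are hypothetical.

```python
import math
from collections import Counter


class BigramLM:
    """Bigram language model with add-one (Laplace) smoothing."""

    def __init__(self, sentences):
        self.unigrams, self.bigrams = Counter(), Counter()
        for sentence in sentences:
            tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
            self.unigrams.update(tokens[:-1])  # how often each token is a context
            self.bigrams.update(zip(tokens, tokens[1:]))
        # possible next tokens: every training word plus the end marker </s>
        self.vocab_size = len({w for s in sentences for w in s.lower().split()}) + 1

    def prob(self, prev, word):
        """Smoothed P(word | prev)."""
        return (self.bigrams[(prev, word)] + 1) / (self.unigrams[prev] + self.vocab_size)

    def log_prob(self, sentence):
        """Log-probability of a whole sentence, including the end marker."""
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        return sum(math.log(self.prob(p, w)) for p, w in zip(tokens, tokens[1:]))


lm = BigramLM(["the cat sat", "the dog sat"])
print(lm.log_prob("the cat sat") > lm.log_prob("sat the cat"))  # → True
```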

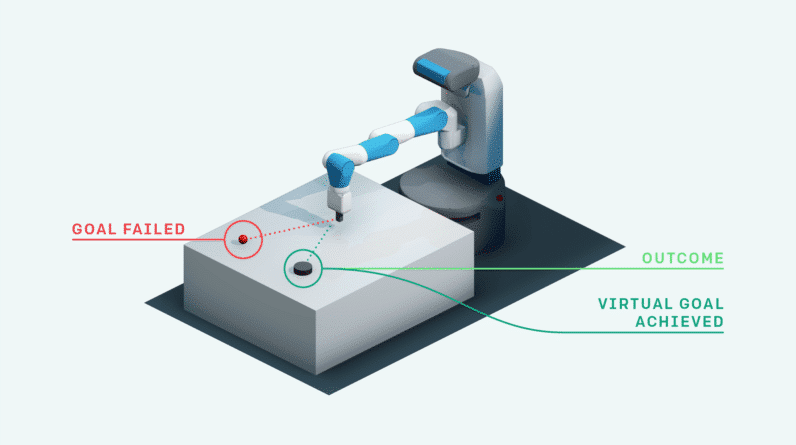

Machine Learning

Machine learning techniques, such as supervised and unsupervised learning, have been widely applied in NLP. Machine learning algorithms learn patterns from training data and use them to make predictions. They can be used for tasks such as text classification, named entity recognition, and sentiment analysis. Machine learning approaches can handle complex language phenomena and adapt to different contexts. However, supervised methods typically require large amounts of annotated data for training.
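To make learning from training data concrete, here is a minimal sketch of supervised learning with a perceptron, one of the simplest linear classifiers, over bag-of-words features. The four labeled reviews are hypothetical; whenever the model misclassifies an example, it nudges the weights of that example's words toward the correct label.

```python
from collections import Counter, defaultdict


def features(text):
    """Bag-of-words features: word -> count."""
    return Counter(text.lower().split())


def train_perceptron(examples, epochs=10):
    """examples: list of (text, label) pairs with label in {+1, -1}."""
    weights, bias = defaultdict(float), 0.0
    for _ in range(epochs):
        for text, label in examples:
            feats = features(text)
            score = bias + sum(weights[w] * c for w, c in feats.items())
            if label * score <= 0:  # wrong side of (or on) the boundary: update
                for w, c in feats.items():
                    weights[w] += label * c
                bias += label
    return weights, bias


def predict(weights, bias, text):
    score = bias + sum(weights.get(w, 0.0) * c for w, c in features(text).items())
    return 1 if score > 0 else -1


reviews = [
    ("great movie loved it", 1),
    ("wonderful acting great plot", 1),
    ("terrible movie hated it", -1),
    ("boring plot awful acting", -1),
]
w, b = train_perceptron(reviews)
print(predict(w, b, "great acting"), predict(w, b, "awful boring movie"))
```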

Deep Learning

Deep learning is a subfield of machine learning that learns layered, increasingly abstract representations of data. Deep learning models, which are multi-layer neural networks such as recurrent networks and transformers, have demonstrated remarkable performance in various NLP tasks. They can handle complex language structures and capture long-range dependencies. Deep learning approaches require substantial training data and computational resources, as well as careful optimization and fine-tuning of the models.
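Transformer models, one widely used deep learning architecture, capture long-range dependencies with self-attention: every position computes a weighted average over all positions, so distant words are only one step apart. The sketch below implements scaled dot-product attention in plain Python, assuming the input vectors serve directly as queries, keys, and values; real transformers learn separate projections, use multiple attention heads, and stack many layers.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """output_i = sum_j softmax_j(q_i . k_j / sqrt(d)) * v_j for each query i."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        outputs.append(
            [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
        )
    return outputs, all_weights


# Three toy 2-dimensional "token" vectors attending to each other.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(x, x, x)
for row in attn:
    print([round(w, 3) for w in row])
```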

Hybrid Approaches

Hybrid approaches combine different methods and techniques from rule-based systems, statistical approaches, machine learning, and deep learning. These approaches leverage the strengths of each method to achieve better performance and accuracy in NLP tasks. Hybrid approaches can handle complex language phenomena, adapt to different contexts, and leverage expert knowledge. They often require careful design and optimization, as well as a large amount of training data for machine learning components.
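A hybrid design can be as simple as letting high-precision rules fire first and falling back to a data-driven component otherwise. In this sketch, which routes support messages, the regex rules, labels, and training sentences are all hypothetical, and the fallback is only a count-based scorer standing in for a properly trained statistical or neural model.

```python
import re
from collections import Counter

# Hypothetical high-precision rules; they take priority over the model.
RULES = [
    (re.compile(r"\b(refund|chargeback)\b", re.I), "billing"),
    (re.compile(r"\b(password|log ?in)\b", re.I), "account"),
]

# Hypothetical labeled examples for the data-driven fallback.
TRAINING = [
    ("my invoice shows the wrong amount", "billing"),
    ("i was charged twice this month", "billing"),
    ("i cannot access my profile settings", "account"),
    ("please update my email address", "account"),
]


def train_word_counts(examples):
    """Count how often each word appears in each label's examples."""
    counts = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(text.lower().split())
    return counts


WORD_COUNTS = train_word_counts(TRAINING)


def classify(text):
    """Return (label, source): rules first, then the count-based fallback."""
    for pattern, label in RULES:
        if pattern.search(text):
            return label, "rule"
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in WORD_COUNTS.items()}
    return max(scores, key=scores.get), "model"


print(classify("I want a refund"))
print(classify("please update my profile"))
```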

Applications of Natural Language Processing

Text Classification

Text classification involves categorizing text into predefined classes or categories. NLP models can be trained to classify documents, emails, customer reviews, or social media posts into different categories based on their content. Text classification finds applications in email filtering, spam detection, sentiment analysis, and content categorization.
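Multinomial Naive Bayes is a classic baseline for text classification tasks such as spam detection. The sketch below assumes whitespace tokenization and add-one smoothing, and the tiny spam/ham training set is hypothetical. It scores each class by its prior probability times the smoothed probability of each word in the message, working in log space to avoid numerical underflow.

```python
import math
from collections import Counter


class NaiveBayes:
    """Multinomial Naive Bayes with add-one smoothing over bag-of-words."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        words = [w for w in text.lower().split() if w in self.vocab]  # skip unseen words
        n_docs = sum(self.doc_counts.values())
        best, best_score = None, -math.inf
        for c in self.classes:
            total = sum(self.word_counts[c].values())
            # log P(c) + sum over words of log P(w | c), Laplace-smoothed
            score = math.log(self.doc_counts[c] / n_docs)
            for w in words:
                score += math.log((self.word_counts[c][w] + 1) / (total + len(self.vocab)))
            if score > best_score:
                best, best_score = c, score
        return best


texts = ["win a free prize now", "free money click now",
         "meeting moved to monday", "lunch with the team on monday"]
labels = ["spam", "spam", "ham", "ham"]
clf = NaiveBayes().fit(texts, labels)
print(clf.predict("free prize inside"), clf.predict("team meeting on monday"))
```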

Sentiment Analysis

Sentiment analysis, also known as opinion mining, aims to determine the sentiment or emotional tone of a piece of text. NLP models can be trained to analyze customer reviews, social media feeds, or news articles and determine whether the sentiment expressed is positive, negative, or neutral. Sentiment analysis is useful in brand monitoring, customer feedback analysis, and market research.
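One simple approach to sentiment analysis is lexicon-based scoring: sum the polarity of known opinion words, flipping the sign after a negator such as “not”. The lexicon, negator list, and two-token negation window below are hypothetical choices; trained classifiers generally handle context better, but the sketch makes the positive, negative, or neutral decision easy to follow.

```python
import re

# Hypothetical sentiment lexicon (word -> polarity) and negators.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no", "isn't", "wasn't", "don't"}


def sentiment(text):
    """Classify text as 'positive', 'negative' or 'neutral' from lexicon scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        # flip the polarity if either of the two preceding tokens is a negator
        if any(t in NEGATORS for t in tokens[max(0, i - 2):i]):
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in ["I love this phone", "The food was not good", "It arrived on Tuesday"]:
    print(review, "->", sentiment(review))
```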

Machine Translation

Machine translation involves automatically translating text from one language to another. NLP models can be trained on parallel corpora, which are collections of texts paired with their translations in another language. Machine translation helps break down language barriers and facilitates communication across cultures and languages.
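Learning from parallel corpora can be illustrated with IBM Model 1, an early statistical translation model that uses expectation-maximization (EM) to estimate how likely each source word is to translate into each target word, even though the data contains no word-level alignments. This sketch simplifies the model by omitting the special NULL source word, and the three German-English sentence pairs are a toy corpus; modern systems use neural models instead.

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate word-translation probabilities t(e | f) with EM.

    pairs: list of (foreign_tokens, english_tokens). The NULL source
    word of the full IBM Model 1 is omitted for brevity.
    """
    english_vocab = {e for _, es in pairs for e in es}
    uniform = 1.0 / len(english_vocab)
    t = defaultdict(lambda: uniform)  # start with uniform t(e | f)
    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(e, f)
        total = defaultdict(float)  # expected counts c(f)
        for fs, es in pairs:
            for e in es:
                norm = sum(t[(e, f)] for f in fs)
                for f in fs:
                    share = t[(e, f)] / norm  # E-step: soft alignment of e to f
                    count[(e, f)] += share
                    total[f] += share
        # M-step: renormalise expected counts into probabilities
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t


corpus = [
    ("das Haus".split(), "the house".split()),
    ("das Buch".split(), "the book".split()),
    ("ein Buch".split(), "a book".split()),
]
t = train_ibm_model1(corpus)
print(round(t[("house", "Haus")], 3), round(t[("the", "Haus")], 3))
```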

Speech Recognition

Speech recognition involves converting spoken language into written text. NLP models can be trained on large datasets of transcribed speech to accurately transcribe spoken language into text. Speech recognition is used in applications such as transcription services, voice assistants, and voice-controlled devices.
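Transcription accuracy is commonly measured by word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the system output into a reference transcript, divided by the number of reference words. The sketch below computes it with the standard edit-distance dynamic program and assumes a non-empty reference; the example sentences are hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            dist[i][j] = min(substitution, dist[i - 1][j] + 1, dist[i][j - 1] + 1)
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("turn on the lights", "turn off the lights"))  # → 0.25
```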

Chatbots and Virtual Assistants

Chatbots and virtual assistants rely on NLP to understand and respond to user queries and engage in conversation. NLP models enable chatbots to interpret user intents, extract relevant information, and generate human-like responses. Chatbots and virtual assistants find applications in customer support, information retrieval, and task automation.
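At its simplest, a chatbot maps a message to an intent, extracts the details (slots) that intent needs, and fills a response template. The intents, regular expressions, and order-number format below are hypothetical; real assistants typically use trained intent classifiers and entity extractors, but the control flow is similar.

```python
import re

# Hypothetical intents: a trigger pattern and a response template for each.
INTENTS = {
    "order_status": (re.compile(r"\b(where|status|track)\b.*\border\b", re.I),
                     "Order {order_id} is on its way."),
    "greeting": (re.compile(r"\b(hi|hello|hey)\b", re.I),
                 "Hello! How can I help you today?"),
}
ORDER_ID = re.compile(r"#?(\d{5,})")  # slot: an order number of 5 or more digits


def respond(message):
    for intent, (pattern, template) in INTENTS.items():
        if pattern.search(message):
            if intent == "order_status":
                match = ORDER_ID.search(message)
                if not match:  # required slot missing: ask for it
                    return "Could you tell me your order number?"
                return template.format(order_id=match.group(1))
            return template
    return "Sorry, I didn't understand that."


print(respond("Where is my order #12345?"))  # → Order 12345 is on its way.
```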

Information Retrieval

Information retrieval involves finding relevant information from a large collection of documents or sources. NLP models can be used to analyze queries and retrieve documents or sentences that are most relevant to the user’s information needs. Information retrieval is a key component of search engines, recommendation systems, and content management systems.
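A classic way to rank documents against a query is TF-IDF weighting with cosine similarity: terms that are frequent in a document but rare across the collection receive the highest weight. This sketch assumes whitespace tokenization and one common IDF variant, log(N / df) + 1; the help-center documents are hypothetical, and modern search engines combine many more signals.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one {term: tf-idf weight} dict per document, plus the idf table."""
    tokenized = [doc.lower().split() for doc in docs]
    n = len(docs)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # the +1 keeps terms that appear in every document from getting zero weight
    idf = {term: math.log(n / count) + 1 for term, count in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(tokens).items()} for tokens in tokenized]
    return vectors, idf


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0


def search(query, docs, top_k=3):
    vectors, idf = tfidf_vectors(docs)
    q_counts = Counter(query.lower().split())
    q_vec = {t: c * idf[t] for t, c in q_counts.items() if t in idf}  # drop unseen terms
    ranked = sorted(range(len(docs)), key=lambda i: cosine(q_vec, vectors[i]), reverse=True)
    return [docs[i] for i in ranked[:top_k]]


docs = ["how to reset your password",
        "shipping times for international orders",
        "reset a router to factory settings"]
print(search("reset password", docs, top_k=1))
```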

Benefits of Natural Language Processing

Improved Efficiency

Natural Language Processing can significantly improve efficiency by automating and streamlining various tasks. NLP models can process and analyze large volumes of text or speech data in a fraction of the time it would take for humans to do so. This enables organizations to extract valuable insights, make informed decisions, and enhance productivity.

Enhanced Customer Experience

NLP plays a crucial role in enhancing the customer experience by enabling intelligent interactions with customers. Chatbots and virtual assistants powered by NLP can understand and respond to customer queries conversationally, providing timely and accurate assistance. This can improve customer satisfaction and increase loyalty.

Automated Data Analysis

With the increasing availability of data, NLP can automate the analysis of large volumes of unstructured text data. By extracting key information, identifying patterns, and classifying data, NLP models can provide valuable insights for businesses and organizations. Automated data analysis enables organizations to make data-driven decisions and derive actionable intelligence.

Optimized Information Retrieval

NLP techniques can greatly improve the accuracy and efficiency of information retrieval systems. By understanding user queries and analyzing documents, NLP models can retrieve the most relevant information from vast amounts of data. This enables users to find information quickly and easily, saving time and effort.

Support for Decision Making

NLP can support decision-making processes by providing valuable insights and information. By analyzing and extracting relevant information from textual data, NLP models can assist in decision-making in various domains, such as healthcare, finance, and marketing. NLP-powered systems can provide recommendations, predictions, and insights that aid in making informed decisions.

Ethical Considerations in Natural Language Processing

Privacy Concerns

NLP raises privacy concerns because many of its applications involve processing and analyzing personal data. Organizations must ensure that user data is handled securely and in compliance with privacy regulations. Transparency and user consent are crucial in NLP applications that involve the collection and use of personal information.

Bias and Fairness

NLP models can be biased due to the data they are trained on, which can reflect the biases present in society. It is important to ensure that NLP models are trained on diverse and representative data to mitigate bias. Regular monitoring and evaluation of NLP systems for fairness and bias are essential to prevent discrimination and ensure equitable outcomes.
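Monitoring can start with simple group-level metrics. The sketch below computes the demographic parity gap, the largest difference in positive-prediction rate between groups, for a classifier's outputs; the group labels and predictions are hypothetical. This is only one of several fairness metrics, and a small gap on its own does not establish that a system is fair.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 0, 1, 0, 0, 0, 1]
grps = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rate_by_group(preds, grps))  # → {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, grps))  # → 0.5
```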

Transparency

Transparency in NLP systems refers to the ability to understand and explain how the system arrives at its decisions or outputs. NLP models that are considered “black boxes” can raise concerns regarding reliability, accountability, and ethical implications. Research into interpretable and explainable NLP models is important to foster trust and enable users to understand how decisions are made.

Responsibility

Developers and practitioners of NLP have the responsibility to ensure that the technology is used ethically and responsibly. This includes considering the potential consequences and impact of NLP systems on individuals and society. NLP should be developed and deployed in a manner that respects ethical principles and human rights.

Future Directions in Natural Language Processing

Advancements in Artificial Intelligence

Advancements in artificial intelligence, particularly in deep learning, will continue to drive improvements in Natural Language Processing. Ongoing research in areas such as neural language models, transformer architectures, and self-supervised learning holds promise for further advancements in understanding and generating human language.

Conversational AI

Conversational AI aims to create intelligent and human-like conversational agents that can interact with users in a natural and engaging manner. Future developments in NLP will focus on improving dialogue systems, enabling machines to understand context, generate coherent and contextually relevant responses, and engage in more complex conversations.

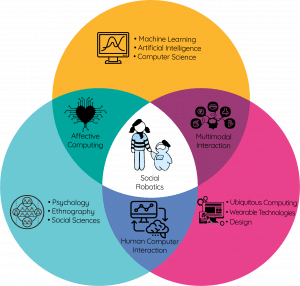

Multimodal Communication

Multimodal communication involves integrating different modes of communication, such as text, speech, images, and gestures, to enable more natural and comprehensive interactions. The future of NLP will see advancements in multimodal models that can understand and generate language in conjunction with other modalities, enabling richer and more interactive communication between humans and machines.

Improved Language Understanding

Advancements in NLP will continue to improve language understanding capabilities. Models will become better at understanding nuanced language, sarcasm, humor, and idiomatic expressions. In addition, NLP models will become more adept at understanding context and addressing the challenges of ambiguity and variability in language use.

Real-time Language Processing

Real-time language processing aims to enable NLP systems to process and understand language in real time, without significant delays. This is particularly important in applications such as speech recognition, chatbots, and virtual assistants, where timely and responsive interactions are crucial. Future developments in NLP will focus on achieving real-time language processing capabilities.

Limitations of Natural Language Processing

Contextual Understanding

NLP models often struggle with understanding language in context. Understanding the intended meaning of a sentence or resolving ambiguities requires a deep contextual understanding that is still challenging for machines. Contextual understanding is crucial for tasks such as natural language understanding, machine translation, and text summarization.

Understanding Sarcasm and Humor

Sarcasm and humor can be particularly difficult for NLP models to interpret accurately. The nuanced and often contradictory nature of sarcasm and humor makes it challenging for machines to understand the intended meaning. Developing NLP models that can recognize and interpret sarcasm and humor is an ongoing area of research.

Idiomatic Expressions

Idiomatic expressions, such as “kick the bucket” to mean “to die,” pose a challenge for NLP models. These expressions have figurative meanings that cannot be deduced from the literal meanings of individual words. Understanding and interpreting idiomatic expressions requires a deep understanding of language nuances and cultural context.

Language Variations

NLP models trained on specific language variants may struggle to handle different variations and dialects. Language variations can include differences in spelling, grammar, vocabulary, and pronunciation. NLP models that can handle language variations and dialects can improve performance in diverse linguistic contexts.

Complex Domain Knowledge

NLP models often struggle with understanding and analyzing text in specialized domains that require domain-specific knowledge. Technical or domain-specific terms, jargon, and complex concepts can pose challenges for NLP models. Developing NLP systems that can accurately process and understand specialized domains is an ongoing challenge.

Conclusion

Natural Language Processing plays a vital role in bridging the gap between human language and machine comprehension. It enables machines to understand, analyze, and generate human language, opening up possibilities for automation, improved customer experiences, data analysis, and decision making. While NLP has made significant advancements, there are still challenges to overcome, such as context understanding, ambiguity, language variations, and idiomatic expressions. However, with ongoing research and development, NLP will continue to evolve and shape the future of human-computer interaction.