As artificial intelligence (AI) spreads into everyday products, from personalized ads to smart home devices, preserving privacy has become a pressing concern. These systems depend on collecting and analyzing personal data, which creates new risks for the people that data describes. This article explores the key challenges of preserving privacy in an AI-driven world and offers potential solutions to safeguard our personal information and maintain control over our digital lives.

Challenges in Preserving Privacy in an AI-driven World

Ethical concerns with AI technology

The rise of AI technology has brought about numerous ethical concerns, particularly in terms of privacy. AI systems can collect vast amounts of personal data, which creates the potential for misuse and breaches of privacy. For instance, the use of facial recognition technology raises questions about the extent of surveillance and the potential for violation of individual privacy rights. AI technology should therefore be designed and implemented in a way that respects and preserves privacy.

Security risks associated with data collection

The extensive collection of personal data by AI systems also poses security risks. As more data is accumulated, the risk of data breaches and unauthorized access increases. This not only compromises individuals’ privacy but also exposes them to identity theft and fraud. The challenge lies in implementing robust security measures to protect the data collected by AI systems and prevent unauthorized access.

Lack of transparency in AI algorithms

AI algorithms often operate in a black-box manner, making it difficult for individuals to understand how their personal data is being used and processed. The lack of transparency in AI algorithms raises concerns about the fairness and accountability of AI systems. Individuals should have the right to know and control how their data is being utilized, and there is a need to develop transparent AI algorithms that can provide explanations and justifications for their decisions.

Increasing potential for surveillance

The integration of AI technology into various aspects of everyday life, such as smart homes and wearable devices, increases the potential for surveillance. The constant monitoring and collection of personal data generated by these AI-driven devices raise concerns about the erosion of privacy. It is crucial to find ways to strike a balance between the benefits of AI technology and the right to privacy, ensuring that individuals’ personal data is not excessively collected and used for surveillance purposes without their knowledge or consent.

Legal and regulatory challenges

The rapid advancement of AI technology has outpaced the development of legal and regulatory frameworks to address the privacy concerns associated with its use. Existing laws and regulations may not adequately cover the unique challenges posed by AI-driven systems. Additionally, the complexity and global nature of AI technology make it difficult to enforce consistent privacy standards. There is a need for comprehensive and adaptive legal and regulatory frameworks that can keep pace with the evolving AI landscape and ensure privacy protection for individuals.

Importance of Privacy in an AI-driven World

Protecting sensitive personal information

Privacy is essential for protecting sensitive personal information. In an AI-driven world, where data is constantly collected and analyzed, individuals’ personal information, such as health records, financial data, and biometric information, may be at risk of unauthorized access or misuse. Preserving privacy ensures that individuals have control over who has access to their sensitive information, reducing the likelihood of identity theft, discrimination, and other harms.

Preserving individual autonomy and freedom

Privacy is closely tied to individual autonomy and freedom. It allows individuals to make choices without external interference, express themselves freely, and maintain a sense of personal identity. In an AI-driven world, where algorithms make decisions and recommendations based on personal data, preserving privacy becomes crucial in protecting individuals’ autonomy and preventing the manipulation or exploitation of personal information for external interests.

Building trust and user acceptance in AI systems

The success and adoption of AI systems depend on the trust and acceptance of users. Privacy plays a vital role in building this trust. When individuals feel that their privacy is respected and protected, they are more likely to engage with AI systems and share their data. If privacy concerns are not adequately addressed, individuals may be reluctant to embrace AI technology or to provide the data that AI systems need to function effectively. Therefore, privacy preservation is essential for fostering trust and user acceptance in AI-driven systems.

Current Approaches in Preserving Privacy

Anonymization and pseudonymization techniques

Anonymization and pseudonymization techniques are commonly employed to protect individuals’ privacy in AI systems. Anonymization involves removing or altering identifying information in datasets so that records can no longer be tied to a person, while pseudonymization replaces identifying information with pseudonyms, which anyone holding the mapping or key can still link back to the original identity. These techniques reduce the risk of reidentification, but how well they work depends on how thoroughly the data is deidentified and on what other information an attacker can combine it with. Therefore, continuous evaluation and improvement of anonymization and pseudonymization techniques are necessary to preserve privacy effectively.
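
As a rough illustration of pseudonymization, the sketch below replaces direct identifiers with a keyed hash (HMAC-SHA256) from the Python standard library. The key, the field names, and the helper functions are hypothetical and chosen only for this example; a real deployment would need proper key management and a review of which fields count as identifying.

```python
import hashlib
import hmac

# Hypothetical secret key for illustration only; a real key would live in a
# key store, never in source code.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable pseudonym for an identifier using HMAC-SHA256."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # shortened for readability


def pseudonymize_record(record: dict, id_fields=("name", "email")) -> dict:
    """Copy a record, replacing the assumed identifying fields with pseudonyms."""
    out = dict(record)
    for field in id_fields:
        if field in out:
            out[field] = pseudonymize(str(out[field]))
    return out


record = {"name": "Alice Example", "email": "alice@example.org", "age": 34}
safe = pseudonymize_record(record)
```

The same input always maps to the same pseudonym, so records can still be joined, but anyone who holds the key can recompute the mapping. That is why pseudonymized data is weaker protection than true anonymization.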

Consent and control mechanisms for data usage

Obtaining informed consent from individuals for data collection and usage is another crucial aspect of privacy preservation. Consent mechanisms should be transparent, easily understandable, and provide individuals with control over their data. However, challenges arise in obtaining meaningful consent, especially in complex AI systems with data practices that users may not fully comprehend. To address these challenges, consent mechanisms should evolve to be more user-centric, offering options for granular control over data usage and empowering individuals to make informed decisions.
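
Granular control of this kind could be modeled as a per-user record of the purposes a person has agreed to, checked before any data is used. The following is a minimal sketch under that assumption; the class, the purpose names, and the `filter_for_purpose` helper are hypothetical and not drawn from any particular consent framework.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Purposes a single user has agreed to (purpose names are illustrative)."""
    user_id: str
    granted: set = field(default_factory=set)

    def grant(self, purpose: str) -> None:
        self.granted.add(purpose)

    def revoke(self, purpose: str) -> None:
        self.granted.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted


def filter_for_purpose(records, consents, purpose):
    """Keep only records whose owner has consented to the given purpose."""
    return [
        r for r in records
        if r["user_id"] in consents and consents[r["user_id"]].allows(purpose)
    ]


consents = {"u1": ConsentRecord("u1"), "u2": ConsentRecord("u2")}
consents["u1"].grant("personalization")
consents["u2"].grant("analytics")

records = [{"user_id": "u1", "clicks": 12}, {"user_id": "u2", "clicks": 7}]
usable = filter_for_purpose(records, consents, "personalization")
```

Users with no consent record are excluded by default, which reflects an opt-in design choice rather than an opt-out one.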

Privacy-enhancing technologies (PETs)

Privacy-enhancing technologies (PETs) encompass a range of tools and approaches that aim to protect privacy in AI-driven systems. PETs include techniques such as encryption, secure multiparty computation, and differential privacy. These technologies help in preserving privacy by minimizing the exposure of personal data. However, the adoption and implementation of PETs face challenges such as performance trade-offs, compatibility issues, and the need for user education. Continued research and development in PETs are essential for overcoming these limitations and advancing privacy preservation in AI systems.

Data minimization strategies

Data minimization strategies involve limiting the collection, use, and retention of personal data. By minimizing the amount of personal data stored and processed, the risk of privacy breaches and unauthorized access is reduced. Organizations can collect only the fields a given purpose requires, and can apply techniques such as anonymization, aggregation, and deidentification to reduce how much personally identifiable information they retain. However, striking the right balance between data minimization and the functional requirements of AI systems can be challenging. Careful consideration of the specific data needs and the associated privacy risks is necessary for effective data minimization.
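
A minimal sketch of what this can look like in practice: drop every field that the stated purpose does not need, and coarsen the fields that remain. The field names, the ten-year age bands, and the three-character postcode prefix are assumptions made for illustration, not recommended thresholds.

```python
# Hypothetical allow-list: the only fields the assumed purpose requires.
NEEDED_FIELDS = {"age", "postcode", "purchase_total"}


def age_band(age: int, width: int = 10) -> str:
    """Generalize an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def minimize(record: dict) -> dict:
    """Keep only needed fields and coarsen the quasi-identifying ones."""
    kept = {k: v for k, v in record.items() if k in NEEDED_FIELDS}
    if "age" in kept:
        kept["age"] = age_band(kept["age"])
    if "postcode" in kept:
        kept["postcode"] = str(kept["postcode"])[:3]  # keep a coarse prefix only
    return kept


raw = {
    "name": "Alice Example",
    "email": "alice@example.org",
    "age": 34,
    "postcode": "90210",
    "purchase_total": 59.90,
}
minimal = minimize(raw)
```

Using an allow-list rather than a block-list means any newly added field is dropped unless someone explicitly justifies keeping it.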

Limitations of Current Approaches

Effectiveness of anonymization/pseudonymization techniques

While anonymization and pseudonymization techniques are widely used, their effectiveness in preserving privacy is not foolproof. Studies have shown that it is often possible to reidentify individuals from supposedly anonymized datasets, especially when combined with external information. The risk of reidentification raises concerns about the long-term privacy implications and highlights the need for continuous improvement and evaluation of these techniques.
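
One way to gauge this risk is to count how many records share each combination of quasi-identifiers, the idea behind k-anonymity. The sketch below uses made-up rows and column names; a result of 1 means at least one person is unique on those columns and could be singled out by anyone who knows them from another source.

```python
from collections import Counter


def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    if not rows:
        return 0
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


# Made-up records with names already removed.
rows = [
    {"zip": "02138", "birth_year": 1970, "sex": "F", "diagnosis": "A"},
    {"zip": "02138", "birth_year": 1970, "sex": "M", "diagnosis": "B"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "C"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "D"},
]

k_fine = k_anonymity(rows, ["zip", "birth_year", "sex"])
k_coarse = k_anonymity(rows, ["zip", "birth_year"])
```

Here the three-column combination leaves some people unique, while dropping one column puts every record in a group of at least two. A check like this covers only the columns it is given, so it cannot rule out reidentification through other external information.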

Challenges in obtaining informed consent

Despite the importance of informed consent in preserving privacy, obtaining meaningful consent can be challenging in AI-driven systems. The complexity of AI algorithms and data practices may make it difficult for individuals to fully understand how their data will be used. Moreover, consent mechanisms are often presented in lengthy and complex legal documents, leading to “consent fatigue” and a lack of genuine understanding. Therefore, there is a need for user-centric consent mechanisms that are clear, concise, and capable of providing individuals with a genuine understanding of their choices.

Unresolved issues with PETs

While PETs have the potential to enhance privacy in AI systems, they have their limitations and unresolved issues. For example, the application of encryption techniques can introduce computational overhead, affecting the performance of AI models. There is also a need to address compatibility issues and the ability to balance privacy-enhancing measures with functionality and utility. Additionally, PETs require user understanding and awareness to be effectively implemented, necessitating education and training initiatives.

Difficulty in achieving data minimization

Data minimization is a key strategy for privacy preservation, but its implementation can be challenging. Organizations often face pressures to collect and retain large datasets for various purposes, such as improving AI algorithms and providing personalized services. Striking a balance between data minimization and the optimal functioning of AI systems can be a complex task. There is a need for organizations to carefully evaluate their data needs and adopt strategies that prioritize privacy without compromising the functionality and usefulness of AI systems.

Emerging Solutions for Preserving Privacy

Differential privacy

Differential privacy is an emerging technique that provides strong, mathematically defined privacy guarantees for individuals while allowing useful insights to be derived from data. It adds calibrated random noise to the data, to query results, or to model updates, so that the output of an analysis changes very little whether or not any one individual’s record is included. Differential privacy has gained attention as a potential solution for privacy preservation in AI-driven systems. However, more noise means stronger privacy but less accurate results, and challenges remain in finding the right balance between privacy protection and the accuracy of AI models.
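
One standard way to add such noise is the Laplace mechanism. The sketch below applies it to a counting query, whose answer changes by at most 1 when one person is added or removed, so the noise scale is 1/epsilon. The function names and the sample data are assumptions for illustration, and the code ignores practical issues such as floating-point attacks and privacy budget accounting.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Noisy count of values matching predicate (a sensitivity-1 query)."""
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 41, 52, 29, 67, 38, 45]
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5)
```

A smaller epsilon gives stronger privacy but a noisier answer; each released answer also consumes part of an overall privacy budget, which this sketch does not track.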

Homomorphic encryption

Homomorphic encryption allows computations to be performed on encrypted data, preserving privacy while enabling data analysis. It allows AI models to operate on encrypted data without the need for decryption, thereby protecting sensitive information. Homomorphic encryption shows promise in privacy-preserving AI systems, but it faces challenges related to computational efficiency and scalability. Continued research is needed to improve the performance and practicality of homomorphic encryption techniques.
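
To make the idea concrete, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme: multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts. This is a sketch for illustration only. The primes are tiny and hard-coded, so it offers no real security, and it supports only addition, unlike the fully homomorphic schemes that arbitrary AI workloads would need. It assumes Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import random

# Toy parameters: real deployments use primes of 1024 bits or more.
P, Q = 1789, 1861
N = P * Q
N_SQ = N * N
G = N + 1
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAM, -1, N)  # modular inverse of LAM mod N


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N under the public key (N, G)."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Decrypt with the private values LAM and MU."""
    l_value = (pow(c, LAM, N_SQ) - 1) // N
    return (l_value * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Combine two ciphertexts so the result decrypts to the sum mod N."""
    return (c1 * c2) % N_SQ


c_sum = add_encrypted(encrypt(20), encrypt(22))
total = decrypt(c_sum)  # the party doing the addition never sees 20 or 22
```

Even this small example hints at the overhead: each encrypted addition is a multiplication modulo N squared, far costlier than adding two integers in the clear.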

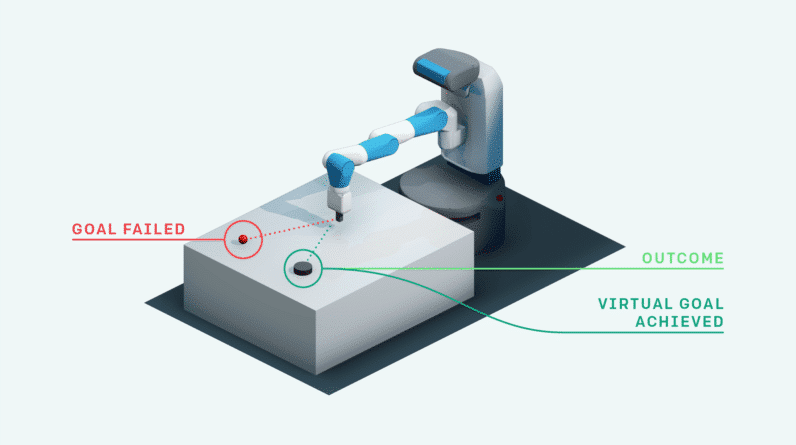

Federated learning

Federated learning is a distributed learning approach that enables AI models to be trained on decentralized data without sharing the raw data. Instead of transferring data to a central server, each device trains a local model on its own data and sends only the resulting model updates, which the server aggregates into a shared model. This technique reduces the need to pool raw data in one place and minimizes the privacy risks associated with data sharing. However, challenges exist in ensuring the security and integrity of federated learning processes, as well as addressing issues related to data heterogeneity and communication costs.
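
The training loop can be sketched with federated averaging on a deliberately tiny model, a single weight `w` in `y = w * x`. Everything here (the two simulated clients, their data, the learning rate, and the number of rounds) is an assumption chosen to keep the example short; real systems add secure aggregation, client sampling, and much larger models.

```python
def local_update(w, xs, ys, lr=0.05, steps=5):
    """Run a few gradient steps on one client's private data; return the new weight."""
    for _ in range(steps):
        grad = 2 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def federated_round(w_global, clients):
    """Average the clients' locally trained weights, weighted by dataset size."""
    total = sum(len(xs) for xs, _ in clients)
    return sum(
        len(xs) * local_update(w_global, xs, ys) for xs, ys in clients
    ) / total


# Simulated clients; each (xs, ys) pair stays on its own device.
clients = [
    ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]),
    ([1.0, 2.0], [2.0, 4.0]),
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
```

Only the weight crosses the network in each round. Model updates can still leak information about the underlying data, which is one reason federated learning is often combined with other privacy-enhancing techniques.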

Blockchain-based solutions

Blockchain technology has the potential to enhance privacy in AI systems by providing decentralized and transparent data management. By utilizing distributed ledger technology, blockchain-based solutions can secure personal data and enable users to have control over their data. Blockchain offers features such as immutability, transparency, and cryptographic security, which can address privacy concerns in AI-driven systems. However, challenges such as scalability, energy consumption, and regulatory considerations need to be addressed for the widespread adoption of blockchain-based solutions.
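
The immutability property comes from chaining cryptographic hashes: each entry commits to the one before it, so changing an old entry invalidates everything after it. The sketch below shows only that mechanism, as a single-process hash-linked log of hypothetical consent events. It is not a distributed ledger and has no consensus, networking, or access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash an entry's payload together with the previous entry's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Append a new entry linked to the current tail of the chain."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})


def verify(chain: list) -> bool:
    """Check every link; any edit to an earlier entry makes this return False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(entry["prev"], entry["payload"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"user": "u1", "event": "consent_granted", "purpose": "analytics"})
append(log, {"user": "u1", "event": "consent_revoked", "purpose": "analytics"})
intact = verify(log)
```

The log stores events about data rather than the personal data itself, because anything written to an append-only structure is hard to remove later.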

Benefits and Challenges of Emerging Solutions

Enhanced privacy guarantees

Emerging solutions, such as differential privacy and homomorphic encryption, offer enhanced privacy guarantees by protecting personal data while still enabling useful analysis. These techniques limit the exposure of individual-level information, even when AI models are trained or utilized. When organizations adopt these privacy-preserving solutions, individuals can have greater confidence that their sensitive information is protected, fostering trust and user acceptance in AI-driven systems.

Balancing privacy and utility

One of the challenges of emerging solutions is striking a balance between privacy preservation and utility. While techniques like differential privacy and homomorphic encryption enhance privacy, they may introduce noise or computational overhead, potentially affecting the accuracy and functionality of AI models. Finding the right trade-off between privacy and utility is essential to ensure that AI systems remain effective while preserving privacy.
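
For differential privacy, this trade-off can be written down directly. As a worked illustration (assuming the standard Laplace mechanism, with a query $f$ of sensitivity $\Delta f$, a dataset $D$, and privacy parameter $\varepsilon$), the released value and its expected absolute error are:

```latex
\tilde{f}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right),
\qquad
\mathbb{E}\bigl[\,\lvert \tilde{f}(D) - f(D) \rvert\,\bigr] = \frac{\Delta f}{\varepsilon}
```

For a counting query, $\Delta f = 1$, so $\varepsilon = 1$ gives an expected error of about 1, while the stronger setting $\varepsilon = 0.1$ gives an expected error of about 10. Whether that error is acceptable depends on the size of the counts being reported.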

Complexity and computational overhead

Emerging privacy-preserving solutions often come with added complexity and computational overhead. Techniques like homomorphic encryption and differential privacy require additional computational resources and may affect the performance of AI models. Balancing the demands of computational efficiency with the need for privacy protection is a significant challenge that researchers and practitioners need to address to make these solutions more practical and accessible.

Interoperability and scalability challenges

As emerging privacy-preserving solutions mature, interoperability and scalability become pressing challenges. Different privacy-enhancing techniques must be able to work together, and with existing AI pipelines, if they are to be combined in a single system. Scalability matters just as much, because these techniques must keep working when AI-driven systems are deployed at large scale. Addressing both challenges will enable the widespread adoption of emerging solutions for privacy preservation.

Collaboration and Regulation for Privacy Preservation

Public-private partnerships

Preserving privacy in an AI-driven world requires collaboration between public and private entities. Public-private partnerships can facilitate the sharing of knowledge, expertise, and resources to develop effective privacy-preserving frameworks. By working together, governments, industries, and academia can collectively address the challenges associated with privacy preservation in AI-driven systems and foster innovation in privacy-enhancing technologies.

Industry-wide standards and guidelines

Establishing industry-wide standards and guidelines is crucial for ensuring consistent privacy protection in AI systems. These standards can define best practices for data handling, consent mechanisms, and the implementation of privacy-enhancing technologies. By adhering to industry-wide standards, organizations can demonstrate their commitment to privacy preservation and build trust with users. Additionally, standards can provide a framework for evaluating the privacy features of AI systems and promoting accountability among stakeholders.

Regulatory frameworks and compliance

Legal and regulatory frameworks play a crucial role in privacy preservation. Governments need to develop comprehensive and adaptive regulations that address the unique challenges posed by AI-driven systems. These frameworks should enforce data protection, define privacy rights, and establish accountability mechanisms. Compliance with regulatory requirements will help ensure that privacy is preserved in AI systems and provide individuals with recourse in case of privacy breaches.

Ethical Considerations in Privacy Preservation

Fairness and bias in AI systems

Privacy preservation should go hand in hand with the consideration of fairness and bias in AI systems. The collection and processing of personal data can result in the amplification and perpetuation of biases, leading to discriminatory outcomes. Ethical considerations should be given to ensure that privacy-preserving measures do not result in unequal treatment or reinforce existing biases. Addressing fairness and bias in AI systems is crucial for promoting equitable and ethical privacy preservation.

Responsible data handling practices

Responsible data handling practices are essential in privacy preservation. Organizations should adhere to principles of data minimization, purpose limitation, and transparency in their data handling processes. They should only collect and retain data that is necessary for the intended purpose and inform individuals about how their data will be used. Furthermore, organizations should implement strong security measures to protect personal data from unauthorized access or breaches. Responsible data handling practices are fundamental in ensuring privacy preservation and building trust with individuals.

Ethical decision-making in AI development

The development and deployment of AI systems should be guided by ethical decision-making principles. Ethical considerations should be embedded in the design and development process to ensure that privacy is prioritized. Decision-makers should consider the potential risks and impacts of AI systems on privacy and take measures to mitigate them. Incorporating ethical frameworks and conducting comprehensive ethical assessments can help in identifying and addressing privacy concerns in AI-driven systems.

Educating and Empowering Individuals in Privacy Protection

Raising awareness about privacy risks

Educating individuals about the risks associated with the collection and use of personal data is crucial for privacy protection. Public awareness programs can inform individuals about the potential privacy implications of AI technology and empower them to make informed decisions. By understanding the risks and their rights, individuals can take an active role in protecting their privacy and demanding accountability from organizations and policymakers.

Providing individuals with tools for privacy control

Individuals should be provided with tools and technologies that empower them to have control over their privacy. User-centric privacy settings and consent mechanisms can give individuals the ability to manage their data and make choices about its usage. User-friendly interfaces and educational resources can help individuals navigate privacy controls effectively. Equipped with the right tools, individuals can actively participate in privacy protection in an AI-driven world.

Promoting digital literacy and data literacy

Digital literacy and data literacy are essential skills for individuals in an AI-driven world. Promoting digital literacy helps individuals understand the technologies they use, the risks involved, and the privacy implications. Data literacy enables individuals to understand and evaluate how their personal data is collected, processed, and used by AI systems. With these literacies, individuals can make informed decisions, protect their privacy, and actively engage with AI technology.

Conclusion

Preserving privacy in an AI-driven world poses unique challenges that require comprehensive and collaborative solutions. Ethical concerns, security risks, transparency issues, and legal challenges all contribute to the complexity of privacy preservation. However, the importance of privacy cannot be overstated. It protects sensitive personal information, preserves individual autonomy, and builds trust and user acceptance in AI systems.

Current approaches, such as anonymization, consent mechanisms, PETs, and data minimization, provide a foundation for privacy preservation. However, they have limitations that need to be addressed, such as the effectiveness of anonymization techniques and challenges in obtaining informed consent.

Emerging solutions, including differential privacy, homomorphic encryption, federated learning, and blockchain-based solutions, offer promising avenues for privacy preservation. They provide enhanced privacy guarantees and address the challenges faced by current approaches. However, implementation and scalability challenges must be overcome to realize their full potential.

Collaboration between public and private entities, the establishment of industry-wide standards, and the development of regulatory frameworks are essential for privacy preservation. Ethical considerations and responsible data handling practices should be integrated into the development and deployment of AI systems. Educating and empowering individuals in privacy protection through awareness programs, privacy control tools, and digital and data literacy is crucial.

Balancing AI advancements with privacy concerns is a complex task that requires continuous effort, innovation, and collaboration. By working together, we can create a privacy-preserving AI-driven world that maximizes the benefits of AI while respecting the rights and privacy of individuals.