The emergence of Artificial Intelligence (AI) in politics and governance raises a range of practical and ethical questions. This article explores where AI intersects with politics, from decision-making algorithms to surveillance technologies, and examines both the potential benefits and the challenges that lie ahead.

Impact of AI on Politics and Governance

In recent years, the field of politics and governance has witnessed the rapid integration of artificial intelligence (AI) technologies. AI has influenced political campaigns, policy-making processes, decision-making procedures, and political communication. This article aims to explore the various impacts of AI in these areas, highlighting both the benefits and challenges that arise. Additionally, ethical considerations surrounding AI-powered politics will be discussed, along with strategies to regulate and mitigate the potential negative consequences. Ultimately, the goal is to build public acceptance of AI in politics while ensuring fairness, transparency, and accountability.

AI in Political Campaigns

AI has changed political campaigns by providing new tools for gathering and analyzing voter data. With AI, political parties can draw on large datasets to understand citizens’ preferences, concerns, and voting behaviors more accurately. This allows campaigners to tailor their messages, strategies, and outreach efforts to specific demographics, increasing the efficiency and effectiveness of their campaigns. By leveraging AI technologies, campaigns can also identify swing districts and allocate their resources where they are likely to have the greatest effect.
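As a rough illustration of what identifying swing districts can mean in practice, the sketch below flags districts whose last two-party margin falls under a chosen threshold. It is a simple heuristic rather than a trained model, and the `DistrictResult` type, the sample figures, and the 5% threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class DistrictResult:
    """Hypothetical record of a past two-party result in one district."""
    name: str
    votes_a: int
    votes_b: int


def margin(d: DistrictResult) -> float:
    """Absolute vote margin as a share of the two-party total."""
    total = d.votes_a + d.votes_b
    if total == 0:
        raise ValueError(f"no votes recorded for {d.name}")
    return abs(d.votes_a - d.votes_b) / total


def swing_districts(results, threshold=0.05):
    """Districts with at least one vote and a margin at or below the
    threshold, ordered from closest race to least close."""
    close = [
        d for d in results
        if d.votes_a + d.votes_b > 0 and margin(d) <= threshold
    ]
    return sorted(close, key=margin)


# Made-up sample data, for illustration only.
results = [
    DistrictResult("North", 5200, 4800),
    DistrictResult("South", 7000, 3000),
    DistrictResult("East", 5050, 4950),
]
print([d.name for d in swing_districts(results)])  # ['East', 'North']
```

A real campaign model would combine many more signals, such as turnout history, demographics, and polling, but the output has the same shape: a ranked list of places where additional effort is most likely to change the result.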

AI in Policy-Making

AI has the potential to reshape policy-making processes by providing valuable insights and recommendations to policymakers. Through machine learning algorithms, AI systems can analyze massive amounts of data, identify patterns, and make predictions about the impact of different policy options. This can help policymakers explore a broader range of scenarios, evaluate the potential consequences of their decisions, and devise evidence-based policies that are more likely to achieve desired objectives. By integrating AI into policy-making, governments can achieve improved efficiency, effectiveness, and outcomes.

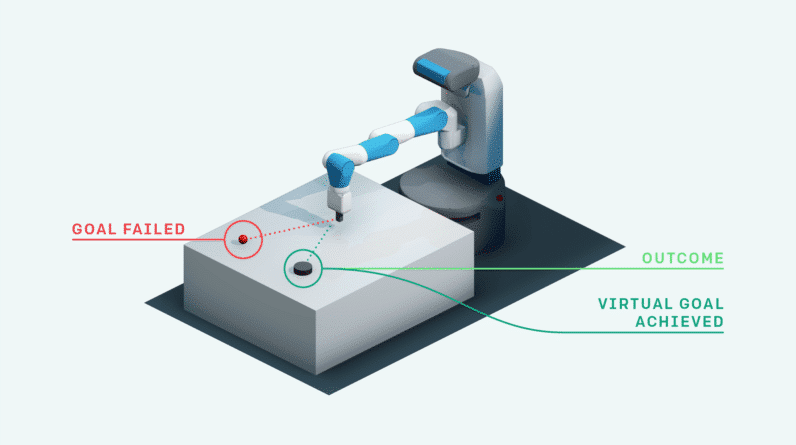

AI in Decision-Making Processes

AI technologies can enhance decision-making processes in politics and governance by automating repetitive tasks, helping to reduce some forms of human bias, and increasing the speed and accuracy of decision-making. Through natural language processing and sentiment analysis, AI systems can analyze public opinion on various issues, enabling policymakers to gauge public sentiment and make more informed decisions. Moreover, AI can assist in identifying potential risks and opportunities, providing decision-makers with comprehensive and timely information to shape their strategies.
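To make the idea of sentiment analysis concrete, here is a deliberately minimal sketch that labels public comments using a small word list. Production systems typically rely on trained language models rather than fixed lexicons. The word lists, function names, and sample comments below are assumptions chosen purely for illustration.

```python
import re
from collections import Counter

# Tiny hand-made lexicons; real systems use far richer resources.
POSITIVE = {"support", "good", "great", "benefit", "improve"}
NEGATIVE = {"oppose", "bad", "harm", "unfair", "worse"}


def sentiment_score(text: str) -> int:
    """Count of positive words minus count of negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return pos - neg


def label(text: str) -> str:
    """Map a score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def summarize(comments) -> Counter:
    """Aggregate labels across a batch of comments."""
    return Counter(label(c) for c in comments)


comments = [
    "I support this bill, it will improve transit",
    "This policy is unfair and will harm renters",
    "The vote is on Tuesday",
]
print(summarize(comments))
```

Even this toy version shows why such tools need care: a word list cannot handle sarcasm, negation ("not good"), or context, which is one reason sentiment figures should inform rather than replace human judgment.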

AI in Political Communication

AI tools have transformed political communication channels and strategies. Chatbots, for instance, provide automated support for answering citizens’ queries, disseminating information, and engaging in dialogue. Natural language processing enables AI to analyze public sentiment on social media platforms, helping politicians and policymakers gauge public opinion and understand citizens’ concerns. AI-powered sentiment analysis can also assist in detecting and addressing emerging issues before they escalate. Additionally, AI algorithms can help identify misinformation and combat the spread of fake news, improving the quality and reliability of political communication.

Benefits of AI in Politics and Governance

Improved Efficiency and Accuracy

One of the key benefits of AI in politics and governance is improved efficiency and accuracy in various processes. AI automates time-consuming tasks such as data analysis, freeing up human resources to focus on strategic and creative endeavors. By streamlining routine tasks, AI reduces human error and enhances accuracy, leading to more precise decision-making and policy implementation. Moreover, AI systems can process and analyze vast amounts of data quickly, providing policymakers with comprehensive insights that would otherwise be difficult to obtain through traditional methods.

Enhanced Data Analysis and Insights

AI’s ability to analyze vast amounts of data rapidly provides policymakers with valuable insights and predictions. By utilizing machine learning algorithms, AI systems can identify patterns, correlations, and trends in data, enabling policymakers to make data-informed decisions. AI’s data analysis capabilities can provide policymakers with a more comprehensive understanding of complex issues, uncover hidden insights, and help predict the potential impact of different policy options. This enhances their ability to develop evidence-based policies that address societal challenges more effectively.

Streamlined Policy Implementation

AI can streamline policy implementation by automating various administrative processes and reducing bureaucratic burdens. For example, AI-powered systems can assist in processing and verifying documents, handling citizen inquiries, and managing digital interactions with the government. By automating routine tasks, AI enables governments to allocate resources more efficiently, reduce administrative costs, and enhance service delivery to citizens. This not only improves the overall efficiency of governance but also enhances citizen satisfaction by providing quicker and more accurate responses to their needs.

Better Citizen Engagement and Participation

AI technology can empower citizens to engage more actively in the political process, fostering a more inclusive democracy. AI-powered platforms and tools can facilitate citizen participation by providing accessible channels for citizens to voice their opinions, share feedback, and contribute to policy discussions. For instance, online platforms and chatbots can enable citizens to interact with policymakers, seek clarifications, and provide input on various policy matters. By leveraging AI, governments can bridge the gap between citizens and policymakers, fostering a culture of transparency, collaboration, and trust.

Challenges of AI in Politics and Governance

Ethical and Privacy Concerns

The increased reliance on AI in politics and governance raises significant ethical and privacy concerns. AI systems are built by humans and trained on human-generated data, so they can inherit the biases present in that data. This raises concerns about potential discrimination and unfair treatment of certain individuals or groups. Moreover, the collection and analysis of vast amounts of personal data by AI systems can raise privacy concerns, especially when proper safeguards are not in place to protect citizens’ sensitive information. Addressing these ethical and privacy concerns is crucial to ensure the responsible and equitable use of AI technologies.

Bias and Discrimination

Bias in AI systems can perpetuate existing inequalities and deepen societal divisions. AI algorithms trained on biased data can produce biased outcomes, favoring certain groups or reinforcing discriminatory practices. For example, when AI systems are used in candidate selection processes, they may inadvertently perpetuate gender or racial bias. Similarly, AI algorithms used for crime prediction may disproportionately target specific communities, leading to unjust outcomes. It is essential to detect and mitigate biases in AI systems to ensure that their use in politics and governance is fair, unbiased, and inclusive.

Reliability and Transparency of AI Systems

The reliability and transparency of AI systems used in politics and governance are concerns that must be addressed to build trust and acceptance. AI algorithms are complex, and their decision-making processes are often perceived as a “black box.” This lack of transparency raises questions about the accountability of AI systems and the potential for manipulation or bias. It is crucial to develop methods and frameworks that enable the explainability and transparency of AI algorithms, allowing policymakers and citizens to understand the rationale behind AI-generated decisions.

Potential Job Displacement

The integration of AI in politics and governance raises concerns about potential job displacement. AI technologies automate tasks that were previously performed by humans, leading to the fear of unemployment or the devaluation of certain job roles. However, it is important to recognize that AI can also create new employment opportunities. As AI takes over routine tasks, humans can shift their focus to more complex and creative endeavors. To harness the benefits of AI while minimizing the negative impacts on employment, reskilling and upskilling programs should be implemented to equip individuals with the skills needed to thrive in an AI-driven society.

Ethical Considerations in AI-Powered Politics

Fairness and Equality

Ensuring fairness and equality is essential when deploying AI in politics and governance. Steps must be taken to eliminate biases in datasets used for training AI systems and to ensure that algorithmic decision-making is free from discrimination. This requires careful data collection, monitoring, and auditing processes. Transparency in AI systems’ decision-making processes is equally crucial to prevent unintended biases and discriminatory outcomes. Governments and policymakers should establish guidelines and standards that prioritize fairness and equality throughout the development and deployment of AI systems.

Transparency and Explainability

The transparency and explainability of AI systems are paramount to building trust and accountability. Citizens and policymakers must be able to understand how AI algorithms arrive at their decisions and recommendations. Explainable AI methodologies, such as interpretable models and rule-based systems, can provide insights into the decision-making processes of AI systems. Openness regarding the data sources, algorithms used, and any proprietary components of AI systems will allow for external scrutiny and help prevent potential biases or ethical violations.

Accountability and Responsibility

When AI systems are involved in decision-making processes, clear lines of accountability and responsibility should be established. Governments and policymakers need to define who is accountable for the outcomes of AI systems and establish legal frameworks that hold individuals or entities accountable for any biases, discriminatory practices, or unethical behaviors related to AI use. Adequate mechanisms should also be in place to address any grievances or issues arising from the use of AI technologies in politics and governance.

Data Privacy and Security

Respecting data privacy and ensuring data security are critical considerations when employing AI in politics and governance. Governments must develop and enforce robust data protection regulations to safeguard citizens’ personal information from unauthorized access, breaches, or misuse. Transparent policies should be in place to govern the collection, storage, and sharing of data within AI systems. Additionally, measures such as data anonymization, stringent access controls, and regular audits should be implemented to protect the privacy and security of citizens’ data.
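As one possible illustration of the anonymization measures mentioned above, the sketch below replaces a direct identifier with a keyed hash, drops the name, and generalizes exact age into a ten-year band. Strictly speaking this is pseudonymization plus generalization, which reduces but does not eliminate re-identification risk. The record fields, the key, and the function names are assumptions for the example.

```python
import hashlib
import hmac


def pseudonymize_id(citizen_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    Without the key, the original ID cannot be recomputed by simply
    hashing candidate values."""
    return hmac.new(secret_key, citizen_id.encode("utf-8"), hashlib.sha256).hexdigest()


def age_band(age: int, width: int = 10) -> str:
    """Generalize an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def anonymize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only the fields needed for analysis, in reduced form."""
    return {
        "pid": pseudonymize_id(record["citizen_id"], secret_key),
        "age_band": age_band(record["age"]),
        "district": record["district"],
    }


# Hypothetical record and key, for illustration only.
record = {"citizen_id": "A-1001", "name": "Jane Doe", "age": 37, "district": "North"}
safe = anonymize_record(record, secret_key=b"demo-key-do-not-reuse")
print(safe["age_band"], safe["district"])  # 30-39 North
```

In a real deployment the key would be stored separately under strict access controls, and the remaining fields would still need review, since combinations of attributes such as district and age band can identify people in small populations.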

Regulating AI in Politics and Governance

Ethics Guidelines for AI Use in Politics

Developing ethics guidelines specifically for AI use in politics and governance is crucial to ensure responsible and ethical practices. These guidelines can address considerations such as fairness, transparency, accountability, privacy, and the prevention of biased outcomes. Governments and policymakers should collaborate with AI experts, ethicists, and relevant stakeholders to define and implement these guidelines. Regular reviews and updates of the guidelines should be conducted to keep up with technological advancements and societal needs.

Establishing Regulatory Frameworks

To enable the responsible and ethical use of AI in politics and governance, regulatory frameworks should be established. These frameworks should outline the legal requirements, responsibilities, and standards for deploying AI technologies. Governments and regulatory bodies should establish clear guidelines on data collection, processing, and sharing, ensuring compliance with data protection regulations. Furthermore, frameworks should address the testing, auditing, and certification of AI systems to ensure their reliability, accuracy, and compliance with ethical standards.

Creating Audit and Oversight Mechanisms

Robust audit and oversight mechanisms are vital to ensure the responsible and accountable use of AI in politics and governance. Independent bodies should be established to audit AI systems, evaluate their adherence to established guidelines and regulatory frameworks, and ensure transparency and fairness. These bodies should have the authority to investigate any grievances, mitigate biases, and address concerns related to AI use. Regular reporting and public disclosure of AI systems’ performance and outcomes should also be mandated to enhance accountability and transparency.

Collaboration between Policymakers and AI Experts

To effectively regulate AI in politics and governance, collaboration between policymakers and AI experts is essential. Policymakers need to develop a deep understanding of AI technologies, their benefits, and their limitations. Collaboration with AI experts can help ensure that regulations and guidelines are based on a thorough understanding of the technology and its potential societal impact. Regular consultations, conferences, and collaborative initiatives should be established to facilitate mutual learning, encourage knowledge sharing, and promote informed policy decisions.

Mitigating Bias and Discrimination

Bias Detection and Elimination

Addressing and mitigating bias in AI systems is crucial to ensure fairness and equality. Bias detection methods should be integrated into the development process, allowing AI developers to identify and rectify biases in training data and algorithms. Additionally, diverse and representative training datasets should be used to reduce biases that can result from underrepresentation. Regular monitoring and evaluation of AI systems for biased outcomes should be conducted to enable timely interventions and adjustments, promoting fair and unbiased decision-making.
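One simple form of bias detection is to compare how often a system produces a favorable outcome for each group. The sketch below computes per-group selection rates and their ratio, and flags the result when the ratio falls below 0.8, a rule of thumb often called the four-fifths rule. Whether that threshold is appropriate for a given political or administrative use is an assumption here, as are the function names and sample data.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of favorable outcomes per group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the system's decision was favorable."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates) -> float:
    """Lowest group rate divided by the highest group rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Made-up decisions: 6 of 10 selected in group A, 3 of 10 in group B.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates, ratio, "flag for review" if ratio < 0.8 else "ok")
```

A low ratio does not by itself prove discrimination, and a high one does not rule it out. It is a screening signal that tells reviewers where to look more closely at the training data and the decision logic.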

Diverse and Inclusive AI Development Teams

To minimize bias and discrimination in AI systems, it is important to have diverse and inclusive AI development teams. A diverse team can bring multiple perspectives and experiences, reducing the risk of biased design and decision-making. Developers from different backgrounds can identify and challenge biases that might otherwise be overlooked. Collaborating with individuals from various disciplines, such as social sciences, ethics, and human rights, can further enhance the inclusivity and fairness of AI technologies.

Continuous Monitoring and Evaluation

Regular monitoring and evaluation of AI systems are crucial to assess their performance, identify biases, and ensure overall fairness. Periodic reviews should be conducted to spot unintended biases, discriminatory practices, or ethical violations that might arise as AI systems evolve. Feedback loops and transparency mechanisms should be established to allow individuals and communities affected by AI technologies to provide input and voice their concerns. Continuous monitoring and evaluation enable timely corrective actions and promote the responsible and accountable use of AI in politics and governance.

Engagement with Marginalized Communities

Engagement with marginalized communities should be prioritized to ensure that their voices are heard and taken into account in AI-powered politics. These communities are often disproportionately impacted by biases in AI systems, making it crucial for policymakers and AI developers to actively seek their perspectives. Public consultation, education programs, and dedicated outreach efforts should be initiated to enable communities to understand how AI technologies are used and to involve them in the decision-making processes. By engaging with marginalized communities, policymakers and AI developers can gain a more comprehensive understanding of the potential risks and benefits of AI deployment.

Ensuring Transparency and Trust

Explainability of AI Algorithms

Explainability is essential to ensure transparency and trust in AI-powered politics. AI algorithms should be designed so that their decision-making can be understood by policymakers and citizens alike. This can be supported by interpretable models and rule-based systems that provide insights into the factors influencing AI-generated decisions. By enabling transparency in the decision-making processes, AI systems can be held accountable, and potential biases or errors can be identified and addressed.
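A rule-based system is the easiest kind of decision logic to explain, because every outcome can be traced to named rules. The following sketch shows a hypothetical benefit-eligibility check that returns both a decision and the reasons for it. The rules, the income limit, and all names are invented for illustration and do not describe any real program.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    """A human-readable condition and the check that implements it."""
    description: str
    passes: Callable[[dict], bool]


# Hypothetical eligibility rules, for illustration only.
RULES = [
    Rule("applicant is a resident", lambda a: a["resident"]),
    Rule("household income is at or below 30000", lambda a: a["income"] <= 30000),
]


def decide(applicant: dict, rules=RULES):
    """Return (approved, failed_rule_descriptions).

    The list of failed rules is the explanation: it states exactly
    which conditions led to a refusal."""
    failed = [r.description for r in rules if not r.passes(applicant)]
    return (not failed), failed


approved, reasons = decide({"resident": True, "income": 45000})
print(approved, reasons)
# False ['household income is at or below 30000']
```

More complex models rarely offer explanations this direct, which is why the choice between accuracy and interpretability is often a policy decision as much as a technical one.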

Public Accessibility to AI-Related Information

To build trust and foster transparency, AI-related information should be made publicly accessible. Policies should mandate the provision of information on the data sources, algorithms, and decision-making processes of AI systems employed in politics and governance. Making this information easily available ensures that citizens, civil society, and experts can scrutinize and assess the fairness, credibility, and ethical implications of AI technologies. Public accessibility to AI-related information encourages accountability, raises public awareness, and allows for independent checks and audits.

Independent Audits of AI Systems

Independent audits of AI systems should be conducted regularly to assess their performance, fairness, and compliance with ethical standards. These audits can be carried out by independent bodies and experts, ensuring a comprehensive evaluation of AI systems’ impact on politics and governance. The audits should assess the adherence to established regulations, guidelines, and policies, as well as identify potential biases or ethical concerns. Independent audits play a critical role in maintaining transparency, accountability, and public trust in AI-powered politics.

Open-Source AI Solutions

Promoting open-source AI solutions can contribute to transparency, collaboration, and inclusivity in politics and governance. Open-source AI algorithms and tools allow for the examination and improvement of AI systems by a diverse community of developers, researchers, and experts. This collaborative approach fosters innovation, enables independent audits, and encourages the identification and mitigation of biases and ethical concerns. The sharing of open-source AI solutions enhances the transparency, reliability, and trustworthiness of AI technologies used in politics and governance.

Political Communication and AI

Automated Content Moderation

AI can facilitate automated content moderation in political communication, allowing for efficient monitoring and filtering of online content. AI algorithms can analyze large volumes of data, flagging and filtering out inappropriate or harmful content. By automating content moderation, AI can help maintain a respectful and civil environment for political discourse, reducing the risks of misinformation, hate speech, and abusive behaviors. However, it is important to strike a balance between content moderation and the protection of free speech, ensuring that AI systems do not inadvertently suppress legitimate political expression.

AI-Based Misinformation Detection

The spread of misinformation poses a significant challenge in political communication. AI can assist in detecting and combating misinformation, analyzing online content and identifying potentially false or misleading information. Natural language processing and machine learning techniques allow AI systems to recognize patterns indicative of misinformation and prioritize fact-checking efforts. By utilizing AI to detect and classify misinformation, policymakers can take proactive measures to address false narratives, protect public discourse, and maintain the integrity of political communication.

Enhancing Political Discourse and Civic Participation

AI tools have the potential to enhance political discourse and civic participation by providing accessible and inclusive platforms for engagement. Chatbots and virtual assistants can assist citizens in navigating complex policy issues, answering their questions, and guiding them through political processes. AI-powered platforms can also serve as spaces for citizens to express their opinions, engage in dialogue, and contribute to the formulation of policies. By leveraging AI, political discourse can become more inclusive, informed, and accessible to a broader range of citizens.

Protecting against AI-Generated Deepfakes

Deepfake technology, which uses AI algorithms to manipulate and generate realistic but fake audio or visual content, poses a significant threat to political communication. AI can play a crucial role in identifying and mitigating the risks associated with deepfakes. Through advanced pattern recognition algorithms, AI can analyze audio and visual content for signs of manipulation, helping to identify and debunk deepfakes. Additionally, AI can assist in developing methods to verify the authenticity of multimedia content, safeguarding the integrity of political communication.

Building Public Acceptance of AI in Politics

Education and Awareness Campaigns

Building public acceptance of AI in politics requires extensive education and awareness campaigns. Citizens need to understand how AI technologies are used, their benefits, and their potential risks. Governments and stakeholders should invest in public outreach initiatives, disseminating information, and promoting dialogue on AI-powered politics. Educational programs, workshops, and public consultations can empower citizens to make informed judgments, participate actively in discussions, and provide input into the development and deployment of AI technologies.

Involving Citizens in Decision-Making

Involving citizens in decision-making processes is vital to ensuring their trust and acceptance of AI in politics and governance. Governments should prioritize citizen engagement, actively seeking public input on AI-related policies and practices. Participatory mechanisms, such as citizen assemblies, online platforms for feedback, and public consultations, can enable citizens to contribute to the decision-making processes and hold policymakers accountable. By involving citizens in shaping AI-powered politics, governments can build a sense of ownership, transparency, and legitimacy.

Promoting Trust in AI Systems

Promoting trust in AI systems is crucial to overcome public skepticism and ensure the acceptance of AI in politics and governance. Governments should prioritize the establishment of robust regulatory frameworks, ethical guidelines, and accountability mechanisms that safeguard the responsible use of AI. Transparent communication about AI systems’ benefits, limitations, and potential risks is essential to build trust. Additionally, engaging in open dialogue with stakeholders, civil society, and citizens can address concerns, dispel misconceptions, and foster a sense of shared responsibility.

Demonstrating Tangible Benefits for Society

To build public acceptance, AI systems in politics and governance should demonstrate clear and tangible benefits for society. Governments should focus on the implementation of AI technologies that solve pressing societal challenges and improve public services. Highlighting success stories and showcasing how AI has positively impacted governance, efficiency, and citizen engagement can help change public perceptions. Transparent reporting and evaluation of AI system performance can further demonstrate the benefits and ensure that the technology is directed towards the public good.

Conclusion

The integration of AI technologies in politics and governance offers tremendous potential to revolutionize political campaigns, policy-making processes, decision-making procedures, and political communication. However, to fully harness the benefits of AI, it is crucial to address the associated challenges and ethical considerations. Governments, policymakers, and AI experts must work together to regulate the use of AI, mitigate biases and discrimination, ensure transparency and trust, and build public acceptance through education, citizen involvement, and responsible practices. By navigating these implications and ethical considerations, we can create a future where AI supports democratic processes, enhances governance, and addresses societal challenges for the betterment of all.