In today’s digital age, artificial intelligence (AI) plays a significant role in various aspects of our lives. From personalized recommendations to automated decision-making, AI promises efficiency and convenience. However, as the reliance on AI grows, so does the concern over bias in these systems. Building fair and neutral AI systems poses a challenge as inherent biases can seep into the algorithms, ultimately impacting the outcomes. This article explores the complexity of tackling bias in AI and the importance of striving towards fairness and neutrality in these technologies.

Understanding Bias in AI

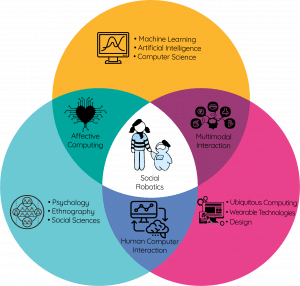

Artificial Intelligence (AI) has rapidly become an integral part of our lives, impacting various sectors such as healthcare, finance, and even our daily interactions. While AI brings numerous benefits, it also brings the challenge of bias. Bias in AI refers to the tendency of machine learning algorithms to exhibit prejudice or favoritism towards certain groups or individuals, resulting in unfair outcomes. To build fair and neutral AI systems, it is crucial to understand the different types of bias and their impact.

Defining Bias in AI

Bias in AI can be defined as a systematic and consistent deviation from a desired outcome, often influenced by historical and cultural disparities. These biases can emerge as a result of biased data, algorithmic design decisions, or unintentional human influence during AI development. It is important to recognize that bias in AI is not necessarily the product of malicious intent; more often it reflects the biases already present in the data and algorithms used.

Types of Bias in AI

There are several types of bias that can manifest in AI systems. One common type is selection bias, where the training data used for AI models is not representative of the real-world population. This can lead to skewed predictions and inaccurate results. Algorithmic bias refers to biases inherent in the algorithms themselves, which can be influenced by the preferences and values of the developers or by the data used for training. Another form of bias is unintended bias, which occurs when AI systems make discriminatory decisions based on attributes that are unrelated to the outcome being predicted.

Impact of Bias in AI

The impact of bias in AI can be far-reaching, affecting individuals, communities, and society as a whole. Biased AI systems can perpetuate existing social inequalities, reinforcing discriminatory practices and excluding marginalized groups. For example, a predictive policing tool trained on historical arrest records can direct more patrols to neighborhoods that were already heavily policed, which in turn can produce disproportionate arrest rates in those communities. Moreover, biased AI systems can hinder social progress, limit opportunities, and produce decisions that lack transparency and accountability. It is therefore essential to address bias in AI to ensure fairness and neutrality in its application.

The Need for Fair and Neutral Systems

Recognizing the ethical implications of biased AI is crucial in order to foster fairness and impartiality in AI systems.

Ethical Implications of Biased AI

The ethical implications of biased AI go beyond technical concerns. Biased AI can perpetuate stereotypes, reinforce discrimination, and violate principles of fairness and equality. For instance, if an AI-powered recruitment system consistently favors candidates of a particular gender or ethnicity, it can have significant social and economic consequences. Automated decisions can also undermine individuals’ privacy and autonomy when they are made about people without their knowledge or consent. It is essential to address these ethical concerns and develop AI systems that are fair, neutral, and aligned with societal values.

Importance of Fairness and Neutrality in AI

Fairness and neutrality are fundamental principles that underpin a just society. Similarly, these principles should guide the development and deployment of AI systems. Fair and neutral AI systems can ensure equal opportunities, reduce biases and injustices, and minimize the impact of historical prejudices. By striving for fairness and neutrality, AI can enhance decision-making processes, improve resource allocation, and promote inclusivity. It is essential to prioritize fairness and neutrality in AI to ensure the well-being and dignity of all individuals.

Challenges in Building Fair and Neutral Systems

Building fair and neutral AI systems poses several challenges that need to be addressed comprehensively. These challenges can arise from biased data, algorithmic decisions, unintentional biases, and the subjectivity inherent in defining fairness.

Data Bias

Data bias is one of the primary challenges in building fair and neutral AI systems. If the training data used to develop AI models is biased or lacks diversity, it can lead to biased predictions and reinforce existing inequalities. Biased training data may reflect historical prejudices or systemic biases present in society. Addressing data bias requires collecting diverse and representative data, carefully considering data collection and sampling techniques, and conducting ongoing audits to ensure fairness in the datasets used.

Algorithm Bias

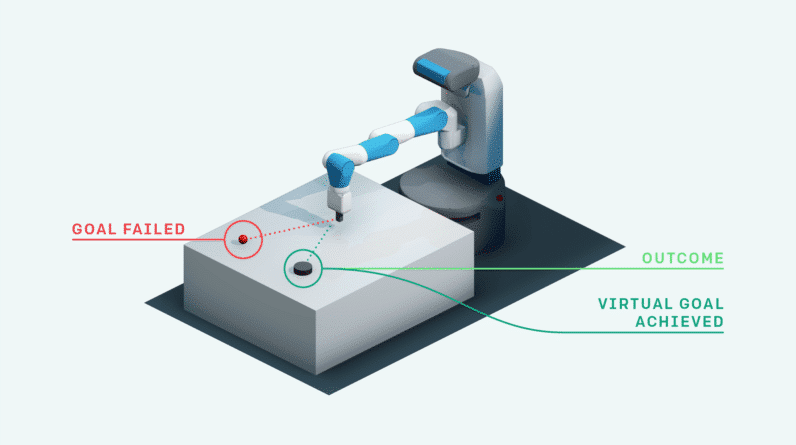

Algorithmic bias can emerge due to the design and implementation of the AI algorithms. Bias can be unintentionally introduced through the choice of features, the training process, or even the assumptions made during algorithm development. Developing transparent algorithms that are scrutinized for potential biases is crucial. By ensuring algorithmic fairness, developers can mitigate the unintended consequences of biased algorithms and enable systems that treat all individuals impartially.

Unintended Bias

Unintended bias occurs when AI systems make discriminatory decisions based on attributes that are unrelated to the outcome being predicted. These biases may be subtle and emerge from complex interactions within the data or algorithms. Detecting and correcting unintended bias requires a deep understanding of the underlying mechanisms and continuous monitoring of AI systems. Ongoing research efforts aim to develop techniques that can minimize unintended biases, ensuring fair and neutral outcomes.

Subjectivity in Fairness

Fairness is a subjective and multifaceted concept whose definition varies across cultures, contexts, and individuals. Different stakeholders may have divergent opinions on what constitutes fair outcomes. Balancing competing notions of fairness can be challenging, as there may be inherent trade-offs. For example, when the underlying rate of positive outcomes differs between groups, a model generally cannot both be equally well calibrated for each group and have equal false positive and false negative rates across groups, unless its predictions are perfect. Addressing subjectivity in fairness requires transparent decision-making processes, the involvement of diverse perspectives, and the incorporation of ethical considerations. Engaging in ongoing dialogue and collaboration can help establish common ground and develop AI systems that strive for fairness and neutrality.

Addressing Data Bias

Addressing data bias is crucial to ensure the fairness and neutrality of AI systems. Several strategies can be employed to mitigate data bias and promote inclusivity.

Data Collection and Sampling

Data collection and sampling techniques play a critical role in addressing data bias. Ensuring that the training data encompasses diverse perspectives, experiences, and demographics can help minimize biases that may be present in the data. Care should be taken to collect data that represents different groups, ensuring that their voices are not marginalized or excluded. Randomized sampling methods and careful consideration of potential biases during data collection can contribute to more inclusive and representative datasets.
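One simple way to check whether a dataset is representative is to compare each group's share of the sample with its share of a reference population. The sketch below is only an illustration: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and in practice the reference figures would have to come from a trusted source such as census data.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares):
    """Return (sample share - reference share) for each reference group."""
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical sample of 100 records and hypothetical population shares.
sample = ["a"] * 70 + ["b"] * 25 + ["c"] * 5
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(sample, reference)
for group, gap in gaps.items():
    # Positive gap: overrepresented in the sample; negative: underrepresented.
    print(f"{group}: {gap:+.2f}")
```

A large negative gap for a group suggests that more data should be collected for it, or that the sample should be stratified, before a model is trained.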

Diverse and Representative Data

Building fair and neutral AI systems necessitates the use of diverse and representative data. By integrating data from multiple sources and perspectives, AI models can be developed that better reflect the complex realities and diversity of society. Data diversity ensures that outcomes are not inadvertently skewed towards certain groups or biased towards specific attributes. Including data from underrepresented communities and marginalized groups is particularly important to address historical disparities and prevent discriminatory outcomes.

Cleaning and Preprocessing Techniques

Data cleaning and preprocessing techniques are instrumental in reducing data bias. These techniques involve identifying and addressing unwanted biases, errors, and inconsistencies in the data. By carefully analyzing the data and correcting skew or noise where it is found, developers can enhance the quality and integrity of the training dataset. Statistical methods (such as resampling or reweighting underrepresented groups), data augmentation, and algorithmic techniques can help mitigate data bias, although no preprocessing step can guarantee that a dataset is entirely free of bias.
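As an illustration of a statistical preprocessing method, the sketch below implements a technique commonly called reweighing. It assigns each training record a weight so that, after weighting, the label is statistically independent of group membership. This is one possible approach and not a method prescribed by this article; the function names and the toy data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each record by P(group) * P(label) / P(group, label)."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)

# Hypothetical data: group "a" has 3 of 4 positive labels, group "b" 1 of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

for g in ("a", "b"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
```

With these weights, both groups have the same weighted positive rate. The weights can then be passed to any learning algorithm that accepts per-sample weights.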

Mitigating Algorithm Bias

Ensuring algorithmic fairness is crucial to address bias in AI systems. Developers must adopt strategies to minimize algorithmic bias and promote impartial decision-making.

Transparent Algorithm Design

Transparent algorithm design is essential to identify and minimize algorithmic bias. Developers should document and disclose the design principles, decision-making processes, and assumptions underlying their algorithms. By providing transparency and accountability, developers can enable others to scrutinize and evaluate the potential biases in the algorithm. Increased transparency promotes public trust, allows for external audits, and fosters continuous improvement of AI systems.

Unbiased Model Evaluation

Evaluating AI models for fairness requires the development of metrics and evaluation methodologies that account for potential biases. Developers need to assess the models for disparities across different groups and investigate any significant biases or skewed outcomes. Unbiased model evaluation involves carefully selecting evaluation datasets, conducting fairness audits, and analyzing the impact of the AI system on different demographic groups. Evaluating AI models for fairness allows developers to identify and rectify any biases, improving the overall fairness and neutrality of the system.
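A fairness audit of this kind often starts by breaking standard metrics down by group. The sketch below computes, for each group, the selection rate (share of positive predictions), the true positive rate, and the false positive rate. The function name `group_rates` and the data are hypothetical, and binary labels and predictions encoded as 0 and 1 are assumed.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    stats = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        pos = [p for t, p in rows if t == 1]  # predictions for actual positives
        neg = [p for t, p in rows if t == 0]  # predictions for actual negatives
        stats[g] = {
            "selection_rate": sum(p for _, p in rows) / len(rows),
            "tpr": sum(pos) / len(pos) if pos else None,
            "fpr": sum(neg) / len(neg) if neg else None,
        }
    return stats

# Hypothetical evaluation set with two groups of four records each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

stats = group_rates(y_true, y_pred, groups)
rates = [s["selection_rate"] for s in stats.values()]
parity_gap = max(rates) - min(rates)  # demographic parity difference
print(stats)
print("selection rate gap:", parity_gap)
```

Here group "a" is selected three times as often as group "b" and also has a higher true positive rate, which is the kind of disparity an audit should surface and investigate.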

Algorithmic Fairness Techniques

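Developing and implementing algorithmic fairness techniques can help mitigate bias in AI systems. These techniques focus on designing algorithms that explicitly consider fairness metrics and prevent discrimination. Fairness-aware algorithms aim to avoid both disparate treatment, where the system explicitly treats people differently because of a protected attribute, and disparate impact, where it makes systematically worse predictions or decisions for certain groups even without using that attribute directly. Implementing algorithmic fairness techniques involves considering counterfactual fairness (a decision should not change if only the individual's protected attribute were different), individual fairness (similar individuals should be treated similarly), and group fairness (outcomes or error rates should be comparable across groups), among other principles. By integrating these techniques, developers can promote fair and neutral outcomes for everyone.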
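Individual fairness can be made concrete as a consistency check: two people whose relevant features are close should receive scores that are close. The sketch below flags pairs whose score difference exceeds a chosen constant times their feature distance. The function name, the use of Euclidean distance, and the constant are illustrative assumptions; choosing a meaningful similarity measure is the hard part in practice.

```python
from itertools import combinations
import math

def lipschitz_violations(features, scores, lipschitz_constant=1.0):
    """Return index pairs whose score gap exceeds L times their feature distance."""
    violations = []
    for i, j in combinations(range(len(features)), 2):
        distance = math.dist(features[i], features[j])  # Euclidean distance
        if abs(scores[i] - scores[j]) > lipschitz_constant * distance:
            violations.append((i, j))
    return violations

# Hypothetical applicants: the first two are nearly identical in their features.
features = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0)]
scores = [0.2, 0.8, 0.9]

violations = lipschitz_violations(features, scores)
print(violations)
```

The first two applicants differ only slightly in their features but receive very different scores, so that pair is flagged for review.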

Unintended Bias and Its Impact

Unintended bias can have a significant impact on the fairness and neutrality of AI systems. Understanding and addressing unintended bias is critical to building AI systems that minimize discriminatory practices.

Understanding Unintended Bias

Unintended bias can arise from subtle interactions within the data or algorithms, leading to biased outcomes. These biases are often unconscious and unintended, resulting from complex patterns or hidden correlations in the data. They can affect decisions related to lending, hiring, criminal justice, and other areas of critical importance. Understanding unintended bias requires thorough analysis of the training data, testing for biases during algorithm development, and ongoing monitoring of the AI system. By identifying and unpacking unintended bias, developers can take appropriate measures to mitigate its impact.

Detecting and Correcting Unintended Bias

Detecting and correcting unintended bias is a multifaceted challenge. It requires developing techniques that can identify and quantify biases within the AI system. Researchers are actively working on algorithms that can detect hidden biases and provide insights into their origins. Correcting unintended bias involves addressing the root causes of bias, such as biased training data or flawed algorithms. This may involve refining the data collection and preprocessing methods, retraining the AI models, or implementing algorithmic techniques specifically aimed at reducing bias. By continuously monitoring, detecting, and correcting unintended bias, developers can build AI systems that are fairer, more neutral, and more reliable.
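One common source of unintended bias is a proxy feature: an input that looks neutral but is strongly correlated with a protected attribute. The sketch below flags candidate proxies using the Pearson correlation. The feature names, the data, and the 0.5 threshold are hypothetical. This check only catches linear relationships in single features, so it should be treated as a first screen and not as proof that no proxies exist.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)

def proxy_candidates(feature_columns, protected, threshold=0.5):
    """Return features whose absolute correlation with the protected attribute meets the threshold."""
    flagged = {}
    for name, values in feature_columns.items():
        r = pearson(values, protected)
        if abs(r) >= threshold:
            flagged[name] = r
    return flagged

# Hypothetical data: protected attribute encoded as 0/1 for eight records.
protected = [0, 0, 0, 0, 1, 1, 1, 1]
feature_columns = {
    "zip_code_cluster": [0, 0, 0, 1, 1, 1, 1, 1],
    "years_experience": [1, 5, 3, 7, 1, 5, 3, 7],
}

flagged = proxy_candidates(feature_columns, protected)
print(flagged)
```

In this toy data only `zip_code_cluster` is flagged, which mirrors the well-known concern that location can stand in for protected characteristics.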

The Role of Human Judgment

Human oversight plays a crucial role in AI development to ensure fairness and neutrality. It is essential to consider the subjectivity in human decision-making and explore ways to address it effectively.

Human Oversight in AI Development

Human oversight is essential throughout all stages of AI development to identify and rectify biases. Humans can bring contextual knowledge, moral reasoning, and critical thinking to the decision-making processes involved in AI development. By involving diverse experts and stakeholders, developers can challenge assumptions, detect potential biases, and ensure that AI systems align with ethical standards and societal values. Human oversight also includes transparent reporting and documentation of the AI development process, enabling external scrutiny and accountability.

Addressing Subjectivity in Human Decision-making

Addressing subjectivity in human decision-making is a crucial aspect of ensuring fairness and neutrality in AI systems. Humans may introduce their own biases into the AI development process, either consciously or unconsciously. It is essential to take steps to address these biases through training, education, and awareness programs. Promoting diversity and inclusivity in AI development teams can also help minimize individual biases. Applying techniques such as independent cross-checking of decisions, external reviews, and ethical guidelines can assist in reducing subjective biases and promoting impartial decision-making.

Regulatory Frameworks and Guidelines

Regulatory frameworks and guidelines play a vital role in shaping the development and use of AI systems. They provide guidance and standards to ensure that AI systems are built with fairness, neutrality, and ethical considerations in mind.

Legal and Ethical Considerations

Legal and ethical considerations form the foundation of regulatory frameworks for AI. Laws and regulations aim to protect individuals’ rights, prevent discriminatory practices, and ensure transparency and accountability in AI systems. Ethical guidelines provide guiding principles for developers and users of AI systems, emphasizing fairness, inclusion, and the promotion of societal well-being. Legal and ethical considerations differ across jurisdictions but commonly address issues such as privacy, bias, explainability, and consent. By adhering to legal and ethical considerations, developers can build AI systems that prioritize fairness, neutrality, and respect for human rights.

Existing Regulations on Bias in AI

Several countries and international organizations have enacted or proposed regulations that bear on bias in AI. For example, the General Data Protection Regulation (GDPR) in Europe outlines principles for lawful, fair, and transparent data processing. In the United States, the proposed Algorithmic Accountability Act, which has been introduced in Congress but has not become law, aims to establish guidelines for fairness and accountability in automated decision-making systems. Organizations such as the Partnership on AI and the IEEE have also developed guidelines and best practices in the field of AI ethics. These regulations and guidelines play a pivotal role in creating an environment that fosters fair and neutral AI systems.

Collaboration and Accountability

Addressing bias in AI requires collaboration and accountability across various stakeholders involved in AI development and deployment.

Industry Collaboration to Tackle Bias

Industry collaboration is crucial to address bias in AI effectively. By sharing best practices, knowledge, and resources, organizations can collectively work towards minimizing biases and promoting fairness in AI systems. Collaborative initiatives allow for the pooling of expertise and diverse perspectives, leading to more comprehensive solutions. Partnerships between academia, industry, and government entities enable the development of tools, methodologies, and frameworks that tackle bias in AI from multiple angles. The collective effort of industry collaboration is essential in driving the progress of fair and neutral AI systems.

Accountability in AI Development

Accountability is a key aspect of ensuring fairness and neutrality in AI systems. Developers and organizations must take responsibility for the outcomes of their AI systems and be accountable for the biases that may arise. This accountability involves transparency in the decision-making processes, disclosing potential biases, and being open to external audits and scrutiny. Establishing clear lines of responsibility and accountability helps address biases promptly and facilitates continuous improvement in AI systems. Organizations should also establish internal mechanisms that allow for feedback, reporting, and appeals from affected individuals, further enhancing accountability.

Towards Fair and Neutral AI Systems

Building fair and neutral AI systems requires continuous effort, monitoring, and education to ensure that biases are minimized and ethical standards are upheld.

Continuous Monitoring and Auditing

Continuous monitoring and auditing are essential to detect and rectify biases in AI systems. By regularly evaluating the performance of AI models, monitoring their impact on different groups, and conducting fairness audits, developers can identify and address biases in a timely manner. Ongoing monitoring ensures that AI systems remain fair and neutral throughout their lifecycle, adapting to evolving societal values and mitigating potential biases that may emerge.
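Ongoing monitoring can be as simple as recomputing a fairness metric on each new batch of decisions and raising a flag when it drifts past a tolerance. The sketch below does this for the gap in selection rates between groups. The batch labels, the data, and the 0.2 tolerance are hypothetical, and decisions are assumed to be encoded as 0 or 1.

```python
def selection_rate_gap(decisions, groups):
    """Largest difference in positive-decision rate between any two groups."""
    totals, positives = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)

def audit_batches(batches, tolerance=0.2):
    """Return the labels of batches whose selection rate gap exceeds the tolerance."""
    return [
        label
        for label, decisions, groups in batches
        if selection_rate_gap(decisions, groups) > tolerance
    ]

# Hypothetical weekly batches of (label, decisions, group memberships).
batches = [
    ("week 1", [1, 0, 1, 0], ["a", "a", "b", "b"]),
    ("week 2", [1, 1, 0, 0], ["a", "a", "b", "b"]),
]

flagged_batches = audit_batches(batches)
print(flagged_batches)
```

A flagged batch is a prompt for human review, not a verdict: the gap may have a legitimate explanation, and the appropriate metric and tolerance depend on the application.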

Education and Awareness on Bias

Education and awareness play a pivotal role in addressing bias in AI. Developers, policymakers, and users of AI systems need to understand the ethical implications, challenges, and potential risks associated with bias. Training programs, workshops, and educational initiatives can help raise awareness about bias in AI and equip individuals with the tools to recognize and address biases. By fostering a culture of ethical responsibility, organizations can ensure that bias is proactively assessed and mitigated throughout the AI development process.

Ethical Responsibility of AI Developers

AI developers bear the ethical responsibility to build fair and neutral AI systems. It is their duty to prioritize fairness, minimize biases, and ensure that AI models treat all individuals equitably. This requires ongoing education, staying up-to-date with the latest research on bias mitigation techniques, and fostering a commitment to upholding ethical principles. Developers should actively engage in continuous learning, collaboration, and accountability to build AI systems that align with societal values and contribute to a more equitable world.

In conclusion, bias in AI presents a significant challenge in building fair and neutral systems. Understanding the various forms of bias and their impact is crucial to address these challenges comprehensively. By recognizing the need for fair and neutral AI systems, actively mitigating bias in data and algorithms, promoting human oversight, adhering to regulatory frameworks and ethical guidelines, collaborating with industry peers, and embracing accountability, we can move towards AI systems that are fair, neutral, and aligned with our values. By striving for fairness and neutrality in AI, we can harness its potential to create a more equitable and inclusive future.