In the digital age, artificial intelligence (AI) has emerged as a powerful tool that enables companies to collect vast amounts of personal data. However, as the potential of AI grows, so does the concern over privacy and individual rights. Finding the delicate balance between efficient data collection and safeguarding personal information has become a pressing challenge for both businesses and society as a whole. This article explores the intersection of AI and privacy, highlighting the need for ethical considerations in data collection practices to protect the rights and interests of individuals.

The Role of AI in Data Collection

Artificial Intelligence (AI) has significantly transformed various aspects of our lives, including data collection. With the advent of machine learning and AI technologies, data collection has become more efficient, accurate, and widespread. AI plays a crucial role in processing vast amounts of data, drawing insights, and automating decision-making processes. However, it is essential to strike a balance between leveraging AI for data collection and safeguarding individual privacy rights.

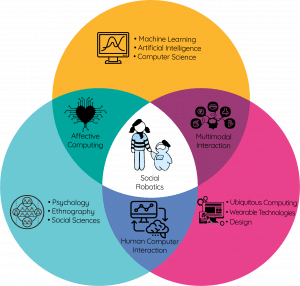

Machine Learning and Artificial Intelligence

Machine learning, a subset of AI, empowers systems to analyze vast amounts of data and identify patterns, enabling them to make predictions and decisions without being explicitly programmed for each task. AI, on the other hand, encompasses a broader range of technologies that simulate human intelligence. These technologies enable machines to understand, learn, reason, and interact with the world, including data collection processes.

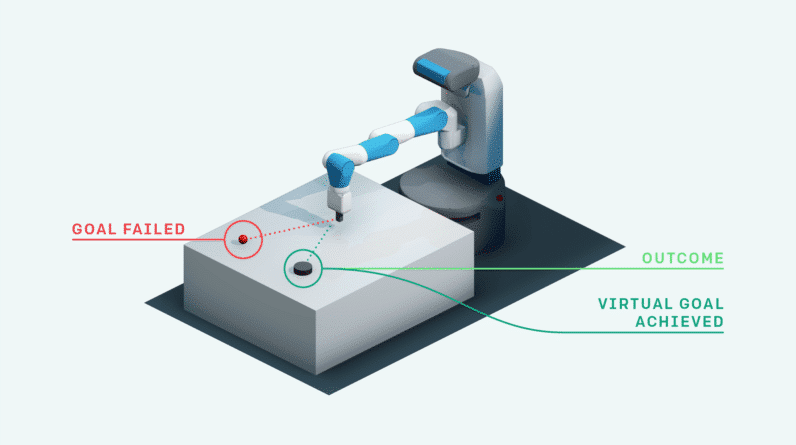

AI-driven algorithms have revolutionized data collection by automating processes that were once time-consuming and labor-intensive. Through machine learning models, these algorithms can analyze large datasets, identify trends and patterns, and derive valuable insights. This automation has not only increased the efficiency of data collection but has also enabled organizations to gather and process data on an unprecedented scale.

Benefits of AI in Data Collection

The use of AI in data collection brings numerous benefits to various industries and sectors. Firstly, AI enables organizations to collect and analyze data in real time, providing them with up-to-date information. This timeliness is particularly crucial in fields such as finance, healthcare, and marketing, where real-time data can drive better decision-making.

AI can also enhance data accuracy by reducing manual errors and inconsistencies in data collection processes. By using machine learning algorithms, organizations can minimize the risks of human error and apply the same rules consistently across every record. This improved accuracy enhances the reliability and validity of the insights derived from the data, provided the algorithms themselves are checked for bias.

Furthermore, AI can handle large volumes of data at a much faster pace than humans, enabling organizations to process vast amounts of information efficiently. This capability not only saves time and resources but also allows for more comprehensive data analysis.

Risks and Concerns

While AI has transformed data collection, it also raises significant risks and concerns, particularly in terms of privacy and individual rights. The collection and analysis of personal data by AI systems have the potential to infringe upon an individual’s privacy. As AI algorithms become more advanced, there is an increased risk of unauthorized access, misuse, and abuse of personal information.

Data breaches and security incidents are a growing concern in the age of AI. Organizations must not only safeguard the data they collect but also ensure that the AI systems used for data collection and analysis are secure. Any vulnerabilities in these systems could lead to the unauthorized disclosure or manipulation of personal information.

Another concern is the potential for discrimination in AI-driven data collection processes. If AI algorithms are trained on biased or incomplete data, they may perpetuate or amplify societal biases. This can result in unfair treatment or discrimination against certain individuals or groups. It is, therefore, crucial to ensure that AI systems used for data collection are designed and trained with fairness and impartiality in mind.

Additionally, the widespread use of AI for data collection raises concerns about surveillance and informed consent. Individuals may not always be aware that their data is being collected, analyzed, and used for various purposes. This lack of transparency and consent can erode trust and undermine individual rights. Organizations must prioritize transparency and informed consent to respect individuals’ privacy and autonomy.

Understanding Privacy and Individual Rights

To comprehend the impact of AI on privacy, it is essential to understand the concept of privacy and the relevance of individual rights in the digital age.

Definition of Privacy

Privacy is a fundamental human right that encompasses the ability to control and protect one’s personal information, actions, and communications. It involves the right to be left alone and to determine when, how, and to what extent personal information is shared with others. Privacy is crucial for maintaining dignity, autonomy, and individuality in a society driven by technological advancements.

Relevance of Individual Rights

Individual rights are essential to protect individuals from undue interference or intrusion by external entities, including governments, organizations, and AI systems. These rights include the right to privacy, freedom of expression, freedom of thought, and freedom from discrimination. In the context of AI and data collection, individual rights are vital to ensure that individuals have control over their personal information and are protected from any potential harm or misuse.

Challenges in the Digital Age

In the digital age, protecting individual rights and privacy has become increasingly challenging. The proliferation of digital technologies has led to the generation and collection of massive amounts of data, often without individuals’ explicit consent or awareness. Additionally, the interconnected nature of the digital world has made it difficult to regulate and protect personal information effectively.

AI systems, with their ability to collect, analyze, and derive insights from vast amounts of data, have further compounded these challenges. The complex algorithms used in AI systems may require access to extensive datasets, including personal information, to generate accurate and meaningful results. Balancing the need for data with the protection of individual rights presents a significant challenge in the era of AI and data collection.

Data Collection in AI Systems

AI systems rely heavily on data to function effectively. Understanding the types of data collected, methods of data collection, and the scale at which data collection occurs is crucial in assessing the implications for individual privacy.

Types of Data Collected

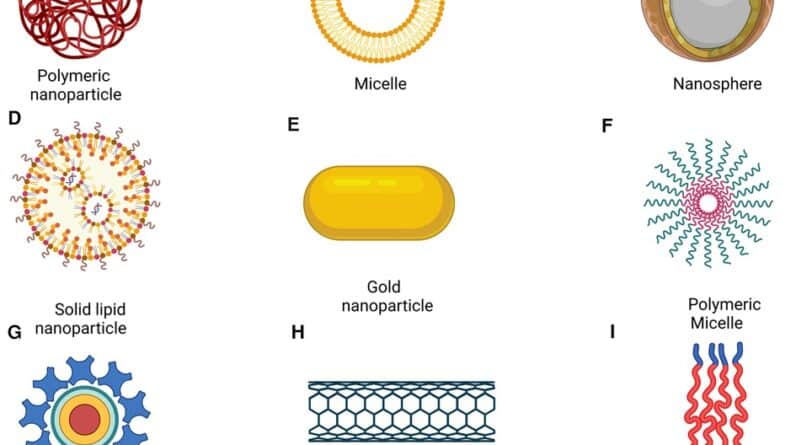

AI systems collect various types of data to train their algorithms and generate insights. These include personal data such as names, addresses, contact information, and financial records. AI systems may also collect sensitive data, including health information, biometric data, and preferences.

In addition to personal and sensitive data, AI systems also collect metadata, which provides information about the data itself. This includes details such as the time and date of data collection, the source of the data, and any relevant contextual information. Metadata is valuable for understanding the context and quality of the data being collected.

Methods of Data Collection

AI systems employ various methods to collect data, including both active and passive means. Active data collection involves individuals willingly providing their information through forms, surveys, or interactions with AI systems. Passive data collection, on the other hand, occurs without individuals’ direct involvement, often through the use of tracking technologies or sensors.

Active data collection methods can include explicit consent processes, where individuals provide their information willingly after being informed about the intended use and potential consequences. Passive data collection, however, presents challenges as individuals may not be aware that their information is being collected or the extent to which their activities are being monitored.

Scope and Scale of Data Collection

AI systems have the potential to collect data on an unprecedented scale. With the ability to process and analyze vast amounts of information, AI systems can gather data from multiple sources, including online platforms, social media, IoT devices, and surveillance systems. This scope and scale of data collection raise concerns about the potential for excessive surveillance, data breaches, and the erosion of privacy.

Organizations must ensure that the data collected through AI systems is proportionate and necessary for achieving the intended goals. The principle of data minimization becomes crucial in determining the appropriate scope and scale of data collection. Collecting only the data that is necessary and relevant can help mitigate privacy risks and safeguard individual rights.
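As a minimal sketch of data minimization in practice, the snippet below keeps only the fields that a declared purpose needs and discards the rest before storage. The purposes, field names, and the `minimize` helper are hypothetical and chosen purely for illustration; a real allow-list would come from an organization's own data inventory.

```python
from typing import Any, Dict, FrozenSet

# Hypothetical allow-list: each processing purpose maps to the fields it needs.
ALLOWED_FIELDS: Dict[str, FrozenSet[str]] = {
    "newsletter": frozenset({"email"}),
    "order_delivery": frozenset({"name", "address", "email"}),
}


def minimize(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Return a copy of the record containing only fields the purpose requires."""
    if purpose not in ALLOWED_FIELDS:
        # Refuse to collect anything for a purpose that has not been defined.
        raise ValueError(f"no allow-list defined for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# Illustrative input: the form submitted more than the newsletter needs.
submitted = {
    "name": "Ada Example",
    "email": "ada@example.com",
    "address": "1 Example Street",
    "birth_date": "1990-01-01",
}

print(minimize(submitted, "newsletter"))  # {'email': 'ada@example.com'}
```

Rejecting unknown purposes outright, rather than falling back to storing everything, keeps the default on the side of collecting less.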

Implications for Individual Privacy

The widespread use of AI for data collection has significant implications for individual privacy. Understanding these implications is essential in addressing the potential risks and concerns associated with the use of AI in data collection.

Loss of Control over Personal Information

AI systems may collect, analyze, and utilize personal information without individuals’ explicit consent or awareness. This loss of control over personal information can lead to a sense of vulnerability and undermine individuals’ trust in AI systems. Organizations must prioritize transparency and provide individuals with meaningful control over their personal data to address these concerns.

Potential for Discrimination

If AI algorithms are trained on biased or incomplete datasets, they may perpetuate or amplify existing biases, leading to unfair treatment or discrimination. For example, biased algorithms used in hiring processes may inadvertently discriminate against certain groups. Organizations must ensure that AI systems are developed and trained with fairness, impartiality, and diversity in mind to prevent discriminatory practices.

Surveillance and Informed Consent

The extensive data collection capabilities of AI systems can lead to increased surveillance. Individuals may not always be aware of the extent to which their activities are being monitored or analyzed. Organizations must prioritize transparency, informing individuals about the data collection processes, purposes, and potential consequences. Obtaining informed consent becomes crucial to respect individuals’ privacy rights and autonomy.

Legal Framework for Protecting Privacy

To protect privacy rights in the context of AI and data collection, various legal frameworks and regulations have been established. These frameworks provide guidelines and requirements for organizations to ensure the responsible and ethical use of AI technologies.

Data Protection Laws and Regulations

Data protection laws, such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the state of California, outline the rights of individuals and the obligations of organizations regarding the collection, use, and storage of personal data. The details differ: the GDPR requires a lawful basis for processing, of which consent is one, while the CCPA centers on notice and the right to opt out of the sale of personal information. Both expect organizations to be transparent about their practices and to protect personal information with appropriate security measures.

The Role of Consent and Anonymization

Consent plays a crucial role in data collection processes involving personal information. Where collection relies on consent, organizations must obtain the clear and informed consent of individuals before collecting their data, and individuals must have the ability to withdraw their consent at any time. Anonymization techniques can also be employed to de-identify personal data so that it can no longer be linked back to individuals, although poorly anonymized datasets can sometimes be re-identified by combining them with other data.
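The two ideas in this section can be sketched in a few lines, under stated assumptions. The `ConsentRegistry` class and the `pseudonymize` helper below are hypothetical names, not part of any particular library. Note that the second function performs keyed pseudonymization, which is weaker than full anonymization: anyone holding the secret key can recompute the mapping.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class ConsentRegistry:
    """In-memory record of which (user, purpose) pairs currently have consent."""

    _granted: Set[Tuple[str, str]] = field(default_factory=set)

    def grant(self, user_id: str, purpose: str) -> None:
        self._granted.add((user_id, purpose))

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Withdrawal always succeeds, even if consent was never given.
        self._granted.discard((user_id, purpose))

    def has_consent(self, user_id: str, purpose: str) -> bool:
        return (user_id, purpose) in self._granted


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


registry = ConsentRegistry()
registry.grant("user-1", "analytics")
print(registry.has_consent("user-1", "analytics"))  # True
registry.withdraw("user-1", "analytics")
print(registry.has_consent("user-1", "analytics"))  # False

# The key here is a placeholder; a real key would be generated and stored securely.
token = pseudonymize("ada@example.com", secret_key=b"illustrative-key-only")
print(len(token))  # 64
```

Checking `has_consent` per purpose, rather than per user, reflects the idea that agreeing to one use of data does not imply agreeing to another.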

Enforcement and Compliance

To ensure compliance with data protection laws and regulations, regulatory bodies and authorities have been established. These entities are responsible for monitoring and enforcing compliance, investigating data breaches, and imposing penalties for non-compliance. Organizations must prioritize compliance with legal requirements and work closely with these regulatory bodies to uphold privacy rights and protect individuals’ personal information.

Ethical Considerations in AI and Privacy

In addition to legal frameworks, ethical considerations must guide the use of AI in data collection. Ethical practices ensure responsible and fair use of AI technologies, taking into account social, cultural, and individual values.

Transparency and Accountability

Transparency is crucial in building trust and ensuring individuals are aware of how their data is being collected, used, and stored. Organizations must provide clear and accessible information about their data collection practices and be accountable for their actions and decisions related to AI systems. Transparent communication fosters trust and enables individuals to make informed choices regarding their privacy.

Fairness and Bias

AI systems must be developed and trained with fairness in mind. Organizations must ensure that the data used to train AI algorithms is diverse, representative, and as free from bias as practicable. Regular audits and checks must be conducted to identify and address any biases that may exist in AI systems. Fairness ensures equal treatment and opportunities for all individuals, regardless of their demographic characteristics.
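One simple form such an audit can take is comparing how often a system produces a favorable outcome for each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The group labels and outcomes are invented sample data, and the function names are hypothetical. A common rule of thumb treats a ratio below 0.8 as a signal for closer review, though no single metric settles whether a system is fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of favorable outcomes for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return 1.0
    return min(rates.values()) / highest


# Invented sample data: (group label, whether the model selected the person).
decisions = (
    [("group_a", True)] * 4 + [("group_a", False)] * 1
    + [("group_b", True)] * 2 + [("group_b", False)] * 3
)

rates = selection_rates(decisions)
print(rates)                          # {'group_a': 0.8, 'group_b': 0.4}
print(disparate_impact_ratio(rates))  # 0.5
```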

Trust and User Empowerment

Building trust is essential in maintaining a privacy-focused AI ecosystem. Organizations must prioritize user empowerment by providing individuals with tools and mechanisms to control their personal information. This can include user-friendly consent management systems, privacy settings, and clear information on how data is used. Empowered users have greater control over their privacy and can make informed choices about their data.

Technological Solutions for Privacy

Technological advancements can offer solutions to address privacy concerns associated with AI and data collection. Privacy-preserving techniques, decentralized data management, and blockchain technology can enhance privacy protection and provide individuals with more control over their personal information.

Privacy-Preserving AI Techniques

Privacy-preserving AI techniques, such as federated learning and differential privacy, aim to protect individual privacy while still allowing for collaborative data analysis. Federated learning trains a shared model across decentralized data sources by exchanging model updates rather than raw data. Differential privacy adds carefully calibrated random noise to results or model updates so that the contribution of any single individual is hard to infer. By limiting the exposure of personal information, these techniques reduce privacy risks, although they do not eliminate them.
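To make differential privacy more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. Adding or removing one person changes a count by at most 1, so noise drawn from a Laplace distribution with scale 1/epsilon is added to the true count. The function names and the sample ages are illustrative assumptions, and a production system would rely on a vetted library rather than hand-rolled noise.

```python
import random
from typing import Callable, Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(
    values: Sequence[int],
    predicate: Callable[[int], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return a noisy count of the values matching the predicate.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Invented sample data: ages of seven people; three of them are 40 or older.
ages = [23, 35, 41, 29, 52, 67, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(round(noisy, 2))  # varies from run to run; the true count is 3
```

The trade-off is explicit in the `epsilon` parameter: a value close to zero hides individuals well but makes the answer less useful, while a large value gives accurate answers with weaker protection.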

Decentralized Data Management

Decentralized data management frameworks, such as personal data stores and virtual personal assistants, enable individuals to retain ownership and control over their personal information. These frameworks allow individuals to securely manage their data and selectively share it with trusted entities. Decentralization ensures that individuals have full visibility and control over how their data is used and shared.

Blockchain and Privacy

Blockchain technology offers potential solutions to privacy challenges in AI and data collection. By leveraging blockchain’s decentralized and transparent nature, organizations can keep a tamper-evident record of how personal data is accessed and used. Blockchain-based systems can enable individuals to track the use of their data and control access through smart contracts. Because entries on a blockchain are difficult to alter or delete, such designs typically need to keep the personal data itself off the chain. Used this way, blockchain technology can enhance transparency, trust, and data security in the context of data collection.
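The integrity property that blockchains provide rests on hash chaining: each entry includes the hash of the one before it, so changing an old entry breaks every later link. The sketch below shows that idea for a log of data-access events. It is a single-machine simplification with hypothetical function names and event fields, not a distributed ledger or a smart contract, and it records only references to data use rather than the personal data itself.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: Dict[str, Any], previous_hash: str) -> str:
    """Hash the event together with the hash of the preceding entry."""
    payload = json.dumps(
        {"event": event, "previous_hash": previous_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append an access event, linking it to the previous entry by hash."""
    previous_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {
            "event": event,
            "previous_hash": previous_hash,
            "hash": _entry_hash(event, previous_hash),
        }
    )


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every link and report whether the chain is intact."""
    previous_hash = GENESIS_HASH
    for entry in log:
        if entry["previous_hash"] != previous_hash:
            return False
        if entry["hash"] != _entry_hash(entry["event"], previous_hash):
            return False
        previous_hash = entry["hash"]
    return True


access_log: List[Dict[str, Any]] = []
append_event(access_log, {"accessor": "analytics-service", "purpose": "reporting"})
append_event(access_log, {"accessor": "support-team", "purpose": "ticket lookup"})
print(verify(access_log))  # True

# Tampering with an earlier entry is detected on verification.
access_log[0]["event"]["purpose"] = "marketing"
print(verify(access_log))  # False
```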

Industry and Government Initiatives

The responsibility of addressing privacy concerns associated with AI and data collection extends beyond individual organizations. Industry and government initiatives play a crucial role in establishing privacy standards, regulations, and best practices.

Privacy Standards and Best Practices

Industry associations and organizations have developed privacy standards and best practices that help organizations navigate the complex landscape of AI and data collection. These standards provide guidance on ethical data collection, storage, and use, emphasizing the importance of transparency, accountability, and user empowerment. Adhering to these standards allows organizations to demonstrate their commitment to privacy protection.

Government Regulation and Oversight

Governments worldwide are recognizing the need for regulation and oversight in AI and data collection. Regulatory bodies are being established to ensure compliance with data protection laws and address emerging privacy challenges. Governments and regulatory bodies work in collaboration with industry stakeholders to develop rules and regulations that strike the right balance between innovation and privacy protection. Government oversight plays a crucial role in safeguarding individual privacy rights and holding organizations accountable for their data collection practices.

Collaboration and Knowledge Sharing

Collaboration and knowledge sharing across industry, academia, and governments are essential in addressing privacy concerns associated with AI and data collection. By sharing best practices, research findings, and lessons learned, stakeholders can collectively develop frameworks that prioritize individual privacy while fostering innovation. Collaboration encourages the development of shared norms and values that guide responsible data collection practices across various sectors.

Building a Privacy-Focused AI Ecosystem

Building a privacy-focused AI ecosystem requires a holistic approach that incorporates privacy-by-design principles, user education, and a balance between innovation and privacy protection.

Privacy by Design

Privacy by design is an approach that embeds privacy into the design and development of AI systems from the outset. Organizations must consider privacy implications at every stage of the AI system lifecycle, including data collection, storage, and use. By implementing privacy-enhancing technologies and practices, organizations can minimize privacy risks and prioritize individual privacy and rights.

User Education and Awareness

Educating users about their rights, privacy risks, and the implications of data collection is crucial in empowering individuals to make informed choices. Organizations must provide accessible and user-friendly information about their data collection processes, the purposes of data collection, and the measures taken to protect personal information. User awareness campaigns and educational initiatives can promote privacy-conscious behaviors and foster a culture of privacy.

Balancing Innovation with Privacy

Striking the right balance between innovation and privacy is a challenge that organizations and policymakers must navigate. While AI and data collection offer immense potential, individual rights and privacy should not be compromised. Organizations must prioritize responsible and ethical data collection practices, ensuring that privacy is not an afterthought but an integral part of the AI ecosystem. Collaborative efforts between industry, government, and civil society are vital in shaping policies and practices that enable innovation while upholding privacy rights.

The Future of AI and Privacy

As AI continues to evolve, so do the challenges and implications for individual privacy. Understanding these implications is crucial in shaping the future of AI and privacy.

Emerging Technologies and Privacy Implications

Emerging technologies such as facial recognition, emotion detection, and biometric data analysis present new privacy challenges. Organizations must navigate the ethical considerations associated with these technologies and prioritize privacy protection in their design and implementation. Continuous research and development are necessary to accurately understand and address the potential privacy risks of emerging AI applications.

Inclusion and Representation

AI systems must be developed and trained on diverse and representative datasets to ensure fairness and avoid discrimination. It is crucial to include individuals from different backgrounds, cultures, and demographics in the development and training processes to mitigate biases and ensure equitable treatment. Inclusion and representation in AI systems are essential to building a future where individual privacy is respected and upheld.

Constant Evolution of Privacy Challenges

Privacy challenges associated with AI and data collection are far from static. As technology evolves, new privacy risks and concerns will emerge. Organizations must proactively address these challenges by staying abreast of emerging trends, working closely with regulatory bodies, and fostering strong privacy cultures. Privacy-conscious practices and technologies must adapt and evolve alongside advancements in AI to maintain trust in the digital age.

In conclusion, AI has revolutionized data collection processes, offering unprecedented efficiency and insights. However, it is crucial to balance the benefits of AI with individual privacy rights. The responsible and ethical use of AI in data collection requires transparency, accountability, and user empowerment. Legal frameworks, ethical considerations, and technological solutions play crucial roles in protecting privacy in the AI era. Building a privacy-focused AI ecosystem requires collaboration, education, and a future-oriented mindset. By addressing the risks and implications associated with AI and privacy, we can ensure a future where innovation and individual rights go hand in hand.